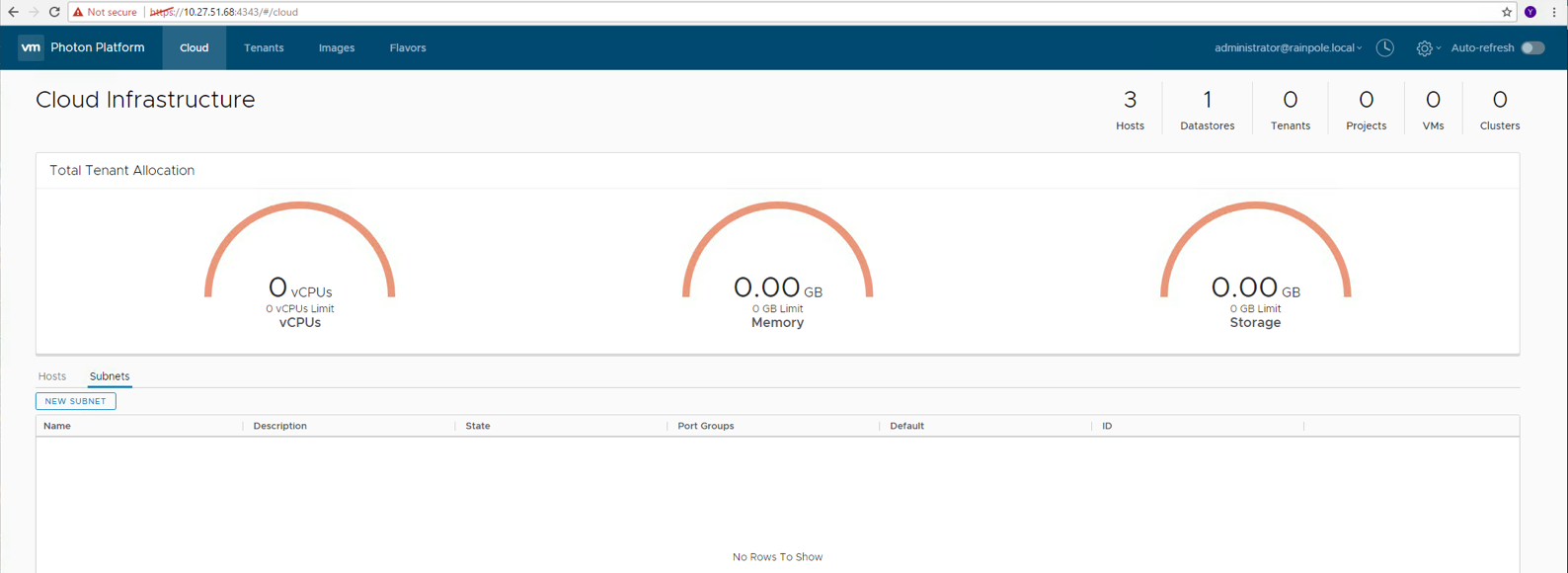

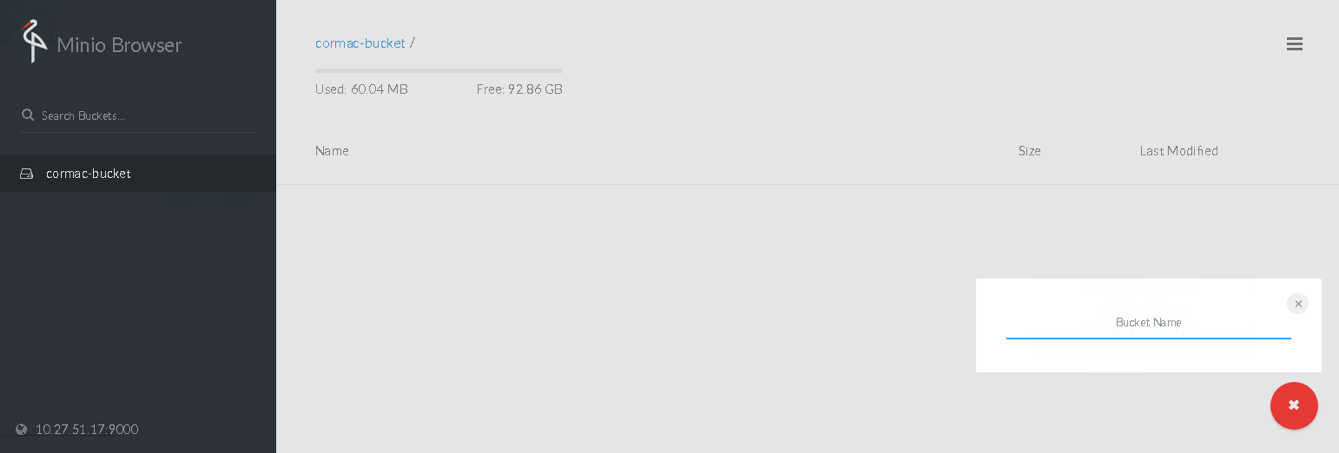

To complete my series of posts on Photon Platform version 1.2, my next step is to deploy Kubernetes (version 1.6) and use my vSAN datastore as the storage destination. The previous posts covered the new Photon Platform v1.2 deployment model, and how to set up vSAN and make the datastore available to the cloud hosts in Photon Platform v1.2. This final step uses the photon controller CLI (mostly) to create the tenant, project, image, and everything else required to deploy K8S on vSAN via PPv1.2. I’m very much going to take a warts-n-all approach to this deployment, as there was a lot of trial and error. A few things have changed with the new v1.2, especially the use of quotas, which replace the old resource ticket. The nice thing about quotas is that they can be adjusted on the fly, but they take a bit of getting used to.

What you need:

- Photon Platform v1.2 should already be deployed.

- You need 3 static IP addresses for the Kubernetes (K8S) VMs: a master IP, a load-balancer IP and an IP address for etcd.

- The network on which the K8S VMs are deployed needs DHCP for the worker VMs.

- You need photon controller CLI installed on your desktop/laptop to run photon CLI commands.

Why not use the UI?

Yes – you can certainly do this, and I showed how to deploy Kubernetes-As-A-Service with Photon Platform version 1.1. Unfortunately there seems to be an issue with the UI in Photon Platform 1.2, where the DNS, gateway and netmask are lost on the Summary tab, so the deployment does not complete. I fed this back to the team, and I’m sure it’ll be addressed soon. In the meantime, the CLI is the way forward (for most of what I need to do).

![]()

Step 1 – Get photon controller CLI and K8S image

You can get both from the usual place on GitHub. Install the CLI on your desktop, as we will use it to complete the deployment of K8S.

Step 2 – Set the target and login

Using the photon controller CLI (I am using the Mac version), set the target to your Photon Platform Load Balancer, and log in. Use https/port 443 as shown here:

Cormacs-MacBook-Pro-8:bin cormachogan$ photon target set -c https://10.27.51.68:443

API target set to 'https://10.27.51.68:443'

Cormacs-MacBook-Pro-8:bin cormachogan$ photon target login

User name (username@tenant): administrator@rainpole.local

Password:

Login successful

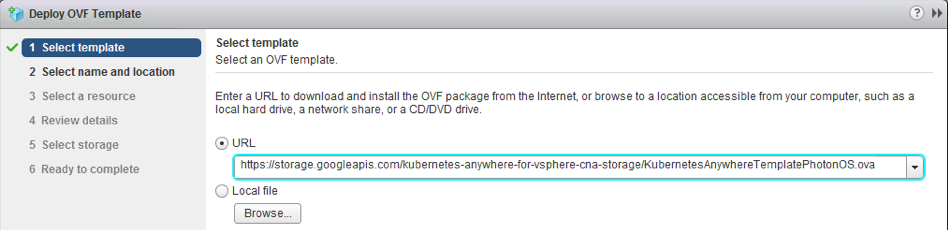

Step 3 – Upload the K8S image to PPv1.2

The assumption is that the K8S OVA has been downloaded locally from step 1. I’ll now push it up to PP using the following command, and check it afterwards.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon image create PP1.2/kubernetes-1.6.0-pc-1.2.1-77a6d82.ova \

-n kube1 -i EAGER

Project ID (ENTER to create infrastructure image):

CREATE_IMAGE completed for 'image' entity 91a1bed4-1b54-4c9e-82cd-392ae3f1ae9b

Cormacs-MacBook-Pro-8:bin cormachogan$ photon image list

ID Name State Size(Byte) Replication_type ReplicationProgress SeedingProgress Scope

91a1bed4-1b54-4c9e-82cd-392ae3f1ae9b kube1 READY 41943040096 EAGER 50% 50% infrastructure

Total: 1

Step 4 – Tag the image for K8S

I tried to do this via the photon controller CLI, but it wouldn’t work for me.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon deployment enable-cluster-type

2017/05/25 13:45:19 Error: photon: { HTTP status: '404', code: 'NotFound', message: '', data: 'map[]' }

I’ve also provided feedback to the team on this. It looks like a known issue which will be addressed in the next update of photon controller CLI. Eventually, to unblock myself, I just logged onto the Photon Platform UI, and marked the image for Kubernetes that way. This is pretty easy to do; just select the image, then actions followed by “Use as Kubernetes image”.

![]()

Step 5 – Create a network for the VMs that will run the K8S scheduler/orchestration framework

This next step is needed so that the VMs that run the various K8S containers (master, etcd, load-balancer and workers) can all communicate. We’re basically creating a network for the VMs to use. I simply leveraged the default VM network in this example (which also has DHCP, as the K8S worker VMs pick up their IPs from DHCP). This network is specified when we are ready to deploy the K8S ‘service’.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon subnet create --name vm-network --portgroups 'VM Network'

Subnet Description: k8s-network

CREATE_NETWORK completed for 'network' entity 94660bdd-d1e1-45b5-8e80-8d737915d751

Cormacs-MacBook-Pro-8:bin cormachogan$ photon subnet set-default 94660bdd-d1e1-45b5-8e80-8d737915d751

Are you sure [y/n]? y

SET_DEFAULT_NETWORK completed for 'network' entity 94660bdd-d1e1-45b5-8e80-8d737915d751

Cormacs-MacBook-Pro-8:bin cormachogan$ photon subnet list

ID Name Kind Description PrivateIpCidr ReservedIps State IsDefault PortGroups

94660bdd-d1e1-45b5-8e80-8d737915d751 vm-network subnet k8s-network map[] READY true [VM Network]

Cormacs-MacBook-Pro-8:bin cormachogan$

Step 6 – Create a “flavour” for the K8S VMs

This step simply defines the resources that go to make up the VMs that are deployed to run the K8S containers. I’ve made it quite small, 1 CPU and 2GB memory. This will also be used at the command line when deploying K8S later on.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon flavor create --name \

cluster-small -k vm --cost 'vm 1 COUNT, vm.cpu 1 COUNT, vm.memory 2 GB'

Creating flavor: 'cluster-small', Kind: 'vm'

Please make sure limits below are correct:

1: vm, 1, COUNT

2: vm.cpu, 1, COUNT

3: vm.memory, 2, GB

Are you sure [y/n]? y

CREATE_FLAVOR completed for 'vm' entity 898322d6-2d9d-4a84-9f41-f0f9adf76cb1

Cormacs-MacBook-Pro-8:bin cormachogan$

Step 7 – Tenant, Project and fun with Quotas

In this step, I create the tenant and the project, and then set some quotas (resources). I said I would show the “warts and all” approach, so here you will see the various errors I get when I don’t get the quota settings quite right. When you create the tenant and project, you can use the --limits option to specify a quota, or you can use ‘quota update’ to modify the resources afterwards. I will show an update example later. Let’s start with the tenant quota. I will set the tenant to have 100 VMs, 1TB memory and 500 CPUs. I will then create a project to consume part of those VM, CPU and memory resources. There could be multiple projects in a tenant, but in this setup, there is only one. I will then set the tenant and project context to point to these.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota set plato --limits \

'vm.count 100 COUNT, vm.memory 1000 GB, vm.cpu 500 COUNT'

Tenant name: plato

Please make sure limits below are correct:

1: vm.count, 100, COUNT

2: vm.memory, 1000, GB

3: vm.cpu, 500, COUNT

Are you sure [y/n]? y

RESET_QUOTA completed for 'tenant' entity ebdd8cd4-515d-4f71-8ce1-a7e9cb318dba

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota show plato

Limits:

vm.count 100 COUNT

vm.cpu 500 COUNT

vm.memory 1000 GB

Usage:

vm.count 0 COUNT

vm.cpu 0 COUNT

vm.memory 0 GB

Cormacs-MacBook-Pro-8:bin cormachogan$ photon project create plato-prjt \

--tenant plato --limits 'vm.memory 100 GB, vm.cpu 50 COUNT, vm.count 10 COUNT'

Tenant name: plato

Creating project name: plato-prjt

Please make sure limits below are correct:

1: vm.memory, 100, GB

2: vm.cpu, 50, COUNT

3: vm.count, 10, COUNT

Are you sure [y/n]? y

CREATE_PROJECT completed for 'project' entity 7592c6f4-07f5-450f-83d7-267af1acd48e

Cormacs-MacBook-Pro-8:bin cormachogan$

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota show plato

Limits:

vm.count 100 COUNT

vm.cpu 500 COUNT

vm.memory 1000 GB

Usage:

vm.cpu 50 COUNT

vm.memory 100 GB

vm.count 10 COUNT

Cormacs-MacBook-Pro-8:bin cormachogan$

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant set plato

Tenant set to 'plato'

Cormacs-MacBook-Pro-8:bin cormachogan$ photon project set plato-prjt

Project set to 'plato-prjt'

Cormacs-MacBook-Pro-8:bin cormachogan$
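The second ‘tenant quota show’ output above suggests that a project’s limits are counted as usage against the tenant. Here is a minimal Python sketch of that bookkeeping, using the numbers from my setup. The function name remaining is my own and has nothing to do with the photon CLI; it is just arithmetic on the values shown.

```python
# Tenant limits, and the usage reported after creating plato-prjt
# (values copied from the 'tenant quota show' output above).
tenant_limits = {"vm.count": 100, "vm.cpu": 500, "vm.memory": 1000}
tenant_usage = {"vm.count": 10, "vm.cpu": 50, "vm.memory": 100}


def remaining(limits, usage):
    """Return what is still unallocated for each quota key."""
    return {key: limit - usage.get(key, 0) for key, limit in limits.items()}


headroom = remaining(tenant_limits, tenant_usage)
for key, value in sorted(headroom.items()):
    print(key, value)
```

With one project carved out, the tenant still has 90 VMs, 450 CPUs and 900 GB of memory left to hand to other projects.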

Now, what I did not include here is anything related to disk resources. Let’s do that next. I’ll add persistent disks and ephemeral disks to the tenant and its project. See if you can spot my not so deliberate mistake:

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota update plato \

--limits 'persistent-disk 100 COUNT, persistent-disk.capacity 200 GB'

Tenant name: plato

Please make sure limits below are correct:

1: persistent-disk, 100, COUNT

2: persistent-disk.capacity, 200, GB

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'tenant' entity ebdd8cd4-515d-4f71-8ce1-a7e9cb318dba

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota update plato \

--limits 'ephereral-disk 100 COUNT, ephereral-disk.capacity 200 GB'

Tenant name: plato

Please make sure limits below are correct:

1: ephereral-disk, 100, COUNT

2: ephereral-disk.capacity, 200, GB

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'tenant' entity ebdd8cd4-515d-4f71-8ce1-a7e9cb318dba

Cormacs-MacBook-Pro-8:bin cormachogan$ photon project quota update \

--limits 'persistent-disk 100 COUNT, persistent-disk.capacity 200 GB, \

ephereral-disk 100 COUNT, ephereral-disk.capacity 200 GB' 7592c6f4-07f5-450f-83d7-267af1acd48e

Project Id: 7592c6f4-07f5-450f-83d7-267af1acd48e

Please make sure limits below are correct:

1: persistent-disk, 100, COUNT

2: persistent-disk.capacity, 200, GB

3: ephereral-disk, 100, COUNT

4: ephereral-disk.capacity, 200, GB

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'project' entity 7592c6f4-07f5-450f-83d7-267af1acd48e

Cormacs-MacBook-Pro-8:bin cormachogan$

Step 8 – Let’s deploy some Kubernetes

OK – I thought this would be more interesting if I tried some trial and error stuff here. I didn’t spend too much time worrying about whether I got the quotas right, and so on. I simply decided to try various options and see how it erred out. Feel free to skip down to Take #5 if you’re not interested in this approach.

Take #1: Most of this command should be straightforward, I think. The only thing that might be confusing is the -d “vsan-disk”; I’ll come back to that shortly. Note that the command line picks up the default tenant, project and network, while the vm_flavor is the one created earlier. Most of the rest of the command is taken up with the IP addresses of the K8S master, etcd and load-balancer. However, as the output shows, this attempt fails due to a lack of resources.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon service create -n kube-socrates -k KUBERNETES --master-ip 10.27.51.208 \

--etcd1 10.27.51.209 --load-balancer-ip 10.27.51.210 --container-network 10.2.0.0/16 --dns 10.27.51.35 \

--gateway 10.27.51.254 --netmask 255.255.255.0 -c 2 --vm_flavor cluster-small --ssh-key ~/.ssh/id_rsa.pub -d vsan-disk

Kubernetes master 2 static IP address (leave blank for none):

etcd server 2 static IP address (leave blank for none):

Creating service: kube-socrates (KUBERNETES)

Disk flavor: vsan-disk

Worker count: 5

Are you sure [y/n]? y

2017/05/25 14:37:26 Error: photon: Task 'd75ca8b9-8a09-48bf-8e94-4718702c9a73' is in error state: \

{@step=={"sequence"=>"1","state"=>"ERROR","errors"=>[photon: { HTTP status: '0', code: 'InternalError', \

message: 'Failed to rollout KubernetesEtcd. Error: MultiException[java.lang.IllegalStateException: \

VmProvisionTaskService failed with error com.vmware.photon.controller.api.frontend.exceptions.external.QuotaException: \

Not enough quota: Current Limit: vm, 0.0, COUNT, desiredUsage vm, 1.0, COUNTQuotaException{limit.key=vm, limit.value=0.0, \

limit.unit=COUNT, usage.key=vm, usage.value=0.0, usage.unit=COUNT, newUsage.key=vm, newUsage.value=1.0, newUsage.unit=COUNT}. \

/photon/servicesmanager/vm-provision-tasks/48ef339d5505951ddff41]', data: 'map[]' }],"warnings"=>[],"operation"=>"\

CREATE_KUBERNETES_SERVICE_SETUP_ETCD","startedTime"=>"1495719439272","queuedTime"=>"1495719439232","endTime"=>"1495719444273","options"=>map[]}}

API Errors: [photon: { HTTP status: '0', code: 'InternalError', message: 'Failed to rollout KubernetesEtcd. Error: \

MultiException[java.lang.IllegalStateException: VmProvisionTaskService failed with error com.vmware.photon.controller.\

api.frontend.exceptions.external.QuotaException: Not enough quota: Current Limit: vm, 0.0, COUNT, desiredUsage vm, 1.0, \

COUNTQuotaException{limit.key=vm, limit.value=0.0, limit.unit=COUNT, usage.key=vm, usage.value=0.0, usage.unit=COUNT, \

newUsage.key=vm, newUsage.value=1.0, newUsage.unit=COUNT}. /photon/servicesmanager/vm-provision-tasks/48ef339d5505951ddff41]', data: 'map[]' }]

Cormacs-MacBook-Pro-8:bin cormachogan$

I’ve hit my first error. The description is pretty good though. The issue here is that I never defined a quota for VM. Let’s address that:

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota update plato \

--limits 'vm 100 COUNT'

Tenant name: plato

Please make sure limits below are correct:

1: vm, 100, COUNT

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'tenant' entity ebdd8cd4-515d-4f71-8ce1-a7e9cb318dba

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota show plato

Limits:

ephereral-disk.capacity 200 GB

persistent-disk 100 COUNT

ephemeral-disk 200 COUNT

persistent-disk.capacity 200 GB

ephereral-disk 100 COUNT

vm.count 100 COUNT

vm.cpu 500 COUNT

vm.memory 1000 GB

ephemeral-disk.capacity 1.024e+06 MB

vm 100 COUNT

Usage:

ephereral-disk.capacity 200 GB

persistent-disk 100 COUNT

vm 0 COUNT

ephemeral-disk 0 COUNT

persistent-disk.capacity 200 GB

ephereral-disk 100 COUNT

vm.count 10 COUNT

vm.cpu 50 COUNT

vm.memory 100 GB

ephemeral-disk.capacity 0 MB

Cormacs-MacBook-Pro-8:bin cormachogan$ photon project quota update \

--limits 'vm 100 COUNT' 7592c6f4-07f5-450f-83d7-267af1acd48e

Project Id: 7592c6f4-07f5-450f-83d7-267af1acd48e

Please make sure limits below are correct:

1: vm, 100, COUNT

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'project' entity 7592c6f4-07f5-450f-83d7-267af1acd48e
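The useful part of the Take #1 error is buried in a lot of noise. As a rough sketch, the Python below pulls the quota key, the current limit and the requested amount out of such a message. The regular expression is based only on the message text shown in this post (joined back onto one line), so treat the pattern as an assumption rather than a documented format; parse_quota_error is a made-up helper name.

```python
import re

# Pattern inferred from the error text in this post; not a documented format.
QUOTA_RE = re.compile(
    r"Current Limit: (?P<key>[\w.\-]+), (?P<limit>[\d.]+), (?P<unit>[A-Z]+), "
    r"desiredUsage (?P=key), (?P<desired>[\d.]+),"
)


def parse_quota_error(message):
    """Return the quota key, limit, desired usage and unit, or None if no match."""
    match = QUOTA_RE.search(message)
    if match is None:
        return None
    return {
        "key": match.group("key"),
        "limit": float(match.group("limit")),
        "desired": float(match.group("desired")),
        "unit": match.group("unit"),
    }


message = (
    "QuotaException: Not enough quota: Current Limit: vm, 0.0, COUNT, "
    "desiredUsage vm, 1.0, COUNTQuotaException{limit.key=vm, limit.value=0.0}"
)
print(parse_quota_error(message))
```

A limit of 0.0 for a key, as here, is a strong hint that the key was never set on the project at all.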

Take #2: With that issue addressed, let’s try once more.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon service create -n kube-socrates -k KUBERNETES --master-ip 10.27.51.208 \

--etcd1 10.27.51.209 --load-balancer-ip 10.27.51.210 --container-network 10.2.0.0/16 --dns 10.27.51.252 \

--gateway 10.27.51.254 --netmask 255.255.255.0 -c 5 --vm_flavor cluster-small --ssh-key ~/.ssh/id_rsa.pub -d vsan-disk

Kubernetes master 2 static IP address (leave blank for none):

etcd server 2 static IP address (leave blank for none):

Creating service: kube-socrates (KUBERNETES)

Disk flavor: vsan-disk

Worker count: 5

Are you sure [y/n]? y

2017/05/25 15:42:24 Error: photon: Task 'f79cfd7f-03f0-4112-b156-15939891ddcb' is in error state: \

{@step=={"sequence"=>"1","state"=>"ERROR","errors"=>[photon: { HTTP status: '0', code: 'InternalError', \

message: 'Failed to rollout KubernetesEtcd. Error: MultiException[java.lang.IllegalStateException: \

VmProvisionTaskService failed with error com.vmware.photon.controller.api.frontend.exceptions.external.\

FlavorNotFoundException: Flavor vsan-disk is not found for kind ephemeral-disk. /photon/servicesmanager/\

vm-provision-tasks/48ef339d5505a3a2cf5b0]', data: 'map[]' }],"warnings"=>[],"operation"=>"\

CREATE_KUBERNETES_SERVICE_SETUP_ETCD","startedTime"=>"1495723336805","queuedTime"=>"1495723336795",\

"endTime"=>"1495723341806","options"=>map[]}}

API Errors: [photon: { HTTP status: '0', code: 'InternalError', message: 'Failed to rollout KubernetesEtcd. \

Error: MultiException[java.lang.IllegalStateException: VmProvisionTaskService \

failed with error com.vmware.photon.controller.api.frontend.exceptions.external.FlavorNotFoundException: \

Flavor vsan-disk is not found for kind ephemeral-disk. /photon/servicesmanager/vm-provision-tasks/48ef339d5505a3a2cf5b0]', \

data: 'map[]' }]

Ah – so this is a problem with the vsan-disk option. I actually need to make a flavor for this so that it places the VMs on vSAN storage. Here is how we do that:

Cormacs-MacBook-Pro-8:bin cormachogan$ photon -n flavor create --name "vsan-disk" \

--kind "ephemeral-disk" --cost "storage.VSAN 1.0 COUNT"

167951af-8bfa-4746-b58f-810157dc4bf8

Cormacs-MacBook-Pro-8:bin cormachogan$ photon -n flavor list

167951af-8bfa-4746-b58f-810157dc4bf8 vsan-disk ephemeral-disk storage.VSAN:1:COUNT

898322d6-2d9d-4a84-9f41-f0f9adf76cb1 cluster-small vm vm:1:COUNT,vm.cpu:1:COUNT,vm.memory:2:GB

service-flavor-1 service-master-vm vm vm.count:1:COUNT,vm.cpu:4:COUNT,vm.memory:8:GB

service-flavor-2 service-other-vm vm vm.count:1:COUNT,vm.cpu:1:COUNT,vm.memory:4:GB

service-flavor-3 service-vm-disk ephemeral-disk ephemeral-disk:1:COUNT

service-flavor-4 service-generic-persistent-disk persistent-disk persistent-disk:1:COUNT

service-flavor-5 service-local-vmfs-persistent-disk persistent-disk storage.LOCAL_VMFS:1:COUNT

service-flavor-6 service-shared-vmfs-persistent-disk persistent-disk storage.SHARED_VMFS:1:COUNT

service-flavor-7 service-vsan-persistent-disk persistent-disk storage.VSAN:1:COUNT

service-flavor-8 service-nfs-persistent-disk persistent-disk storage.NFS:1:COUNT

Cormacs-MacBook-Pro-8:bin cormachogan$

Take #3: Let’s try again.

Cormacs-MacBook-Pro-8:bin cormachogan$ photon service create -n kube-socrates -k KUBERNETES --master-ip 10.27.51.208 \

--etcd1 10.27.51.209 --load-balancer-ip 10.27.51.210 --container-network 10.2.0.0/16 --dns 10.27.51.252 \

--gateway 10.27.51.254 --netmask 255.255.255.0 -c 5 --vm_flavor cluster-small --ssh-key ~/.ssh/id_rsa.pub -d vsan-disk

Kubernetes master 2 static IP address (leave blank for none):

etcd server 2 static IP address (leave blank for none):

Creating service: kube-socrates (KUBERNETES)

Disk flavor: vsan-disk

Worker count: 5

Are you sure [y/n]? y

2017/05/25 15:47:01 Error: photon: Task 'e0eff9c7-9f41-4d1d-b92d-3b5e854da717' is in error state: {@step=={"sequence"=>"1",\

"state"=>"ERROR","errors"=>[photon: { HTTP status: '0', code: 'InternalError', message: 'Failed to rollout KubernetesEtcd. \

Error: MultiException[java.lang.IllegalStateException: VmProvisionTaskService failed with error com.vmware.photon.controller.\

api.frontend.exceptions.external.QuotaException: Not enough quota: Current Limit: storage.VSAN, 0.0, COUNT, desiredUsage storage.VSAN, 1.0, \

COUNTQuotaException{limit.key=storage.VSAN, limit.value=0.0, limit.unit=COUNT, usage.key=storage.VSAN, usage.value=0.0, usage.unit=COUNT, \

newUsage.key=storage.VSAN, newUsage.value=1.0, newUsage.unit=COUNT}. /photon/servicesmanager/vm-provision-tasks/48ef339d5505a4aad7272]', \

data: 'map[]' }],"warnings"=>[],"operation"=>"CREATE_KUBERNETES_SERVICE_SETUP_ETCD","startedTime"=>"1495723613645","queuedTime"=>"1495723613626",\

"endTime"=>"1495723618649","options"=>map[]}}

API Errors: [photon: { HTTP status: '0', code: 'InternalError', message: 'Failed to rollout KubernetesEtcd. Error: MultiException\

[java.lang.IllegalStateException: VmProvisionTaskService failed with error com.vmware.photon.controller.api.frontend.exceptions.external.\

QuotaException: Not enough quota: Current Limit: storage.VSAN, 0.0, COUNT, desiredUsage storage.VSAN, 1.0, COUNTQuotaException\

{limit.key=storage.VSAN, limit.value=0.0, limit.unit=COUNT, usage.key=storage.VSAN, usage.value=0.0, usage.unit=COUNT, newUsage.key=storage.VSAN, \

newUsage.value=1.0, newUsage.unit=COUNT}. /photon/servicesmanager/vm-provision-tasks/48ef339d5505a4aad7272]', data: 'map[]' }]

Another quota issue, this time against the storage.VSAN cost of the newly created vsan-disk flavor. Let’s just bump up the count (and capacity while I am at it).

Cormacs-MacBook-Pro-8:bin cormachogan$ photon project quota update --limits 'storage.VSAN 100 COUNT, \

storage.VSAN.capacity 1000 GB' 7592c6f4-07f5-450f-83d7-267af1acd48e

Project Id: 7592c6f4-07f5-450f-83d7-267af1acd48e

Please make sure limits below are correct:

1: storage.VSAN, 100, COUNT

2: storage.VSAN.capacity, 1000, GB

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'project' entity 7592c6f4-07f5-450f-83d7-267af1acd48e

Cormacs-MacBook-Pro-8:bin cormachogan$

Take #4. Once more into the breach:

Cormacs-MacBook-Pro-8:bin cormachogan$ photon service create -n kube-socrates -k KUBERNETES --master-ip 10.27.51.208 \

--etcd1 10.27.51.209 --load-balancer-ip 10.27.51.210 --container-network 10.2.0.0/16 --dns 10.27.51.252 \

--gateway 10.27.51.254 --netmask 255.255.255.0 -c 5 --vm_flavor cluster-small --ssh-key ~/.ssh/id_rsa.pub -d vsan-disk

Kubernetes master 2 static IP address (leave blank for none):

etcd server 2 static IP address (leave blank for none):

Creating service: kube-socrates (KUBERNETES)

Disk flavor: vsan-disk

Worker count: 5

Are you sure [y/n]? y

2017/05/25 15:50:32 Error: photon: Task '1ac181b3-fc07-4313-8fbe-d1900d8de01c' is in error state: {@step=={"sequence"=>"1","state"=>\

"ERROR","errors"=>[photon: { HTTP status: '0', code: 'InternalError', message: 'Failed to rollout KubernetesEtcd. Error: MultiException\

[java.lang.IllegalStateException: VmProvisionTaskService failed with error com.vmware.photon.controller.api.frontend.exceptions.external\

.QuotaException: Not enough quota: Current Limit: ephemeral-disk.capacity, 0.0, GB, desiredUsage ephemeral-disk.capacity, 39.0, GB\

QuotaException{limit.key=ephemeral-disk.capacity, limit.value=0.0, limit.unit=GB, usage.key=ephemeral-disk.capacity, usage.value=0.0, \

usage.unit=GB, newUsage.key=ephemeral-disk.capacity, newUsage.value=39.0, newUsage.unit=GB}. /photon/servicesmanager/vm-provision-tasks/\

48ef339d5505a573b312a]', data: 'map[]' }],"warnings"=>[],"operation"=>"CREATE_KUBERNETES_SERVICE_SETUP_ETCD","startedTime"=>"1495723824262",\

"queuedTime"=>"1495723824251","endTime"=>"1495723829263","options"=>map[]}}

API Errors: [photon: { HTTP status: '0', code: 'InternalError', message: 'Failed to rollout KubernetesEtcd. Error: MultiException\

[java.lang.IllegalStateException: VmProvisionTaskService failed with error com.vmware.photon.controller.api.frontend.exceptions.external.\

QuotaException: Not enough quota: Current Limit: ephemeral-disk.capacity, 0.0, GB, desiredUsage ephemeral-disk.capacity, 39.0, GBQuotaException\

{limit.key=ephemeral-disk.capacity, limit.value=0.0, limit.unit=GB, usage.key=ephemeral-disk.capacity, usage.value=0.0, usage.unit=GB, \

newUsage.key=ephemeral-disk.capacity, newUsage.value=39.0, newUsage.unit=GB}. /photon/servicesmanager/vm-provision-tasks/48ef339d5505a573b312a]', data: 'map[]' }]

OK – this goes back to my not so deliberate mistake in Step 7: I typo’ed ephereral instead of ephemeral, so I don’t actually have any ephemeral disk capacity. And the vsan-disk flavor I created is of kind “ephemeral-disk”, so it draws on that quota. Let’s fix that up now:

Cormacs-MacBook-Pro-8:bin cormachogan$ photon tenant quota update plato --limits 'ephemeral-disk.capacity 200 GB'

Tenant name: plato

Please make sure limits below are correct:

1: ephemeral-disk.capacity, 200, GB

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'tenant' entity ebdd8cd4-515d-4f71-8ce1-a7e9cb318dba

Cormacs-MacBook-Pro-8:bin cormachogan$ photon project quota update --limits 'ephemeral-disk.capacity 200 GB' \

7592c6f4-07f5-450f-83d7-267af1acd48e

Project Id: 7592c6f4-07f5-450f-83d7-267af1acd48e

Please make sure limits below are correct:

1: ephemeral-disk.capacity, 200, GB

Are you sure [y/n]? y

UPDATE_QUOTA completed for 'project' entity 7592c6f4-07f5-450f-83d7-267af1acd48e

Cormacs-MacBook-Pro-8:bin cormachogan$
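The CLI accepted my misspelt ephereral-disk keys without complaint, so a quick sanity check on the --limits string before running the command would have saved me a take. Below is a small Python sketch of such a check. The KNOWN_KEYS set contains only the keys that appear in this post; it is not an authoritative list of what Photon Platform supports, and the helper names are my own.

```python
# Quota keys seen in this post; an assumption, not an official list.
KNOWN_KEYS = {
    "vm", "vm.count", "vm.cpu", "vm.memory",
    "persistent-disk", "persistent-disk.capacity",
    "ephemeral-disk", "ephemeral-disk.capacity",
    "storage.VSAN", "storage.VSAN.capacity",
}


def parse_limits(limits):
    """Split 'key value UNIT, key value UNIT' into (key, value, unit) tuples."""
    parsed = []
    for item in limits.split(","):
        key, value, unit = item.split()
        parsed.append((key, float(value), unit))
    return parsed


def unknown_keys(limits):
    """Return the keys in a limits string that are not in KNOWN_KEYS."""
    return [key for key, _, _ in parse_limits(limits) if key not in KNOWN_KEYS]


bad = unknown_keys("ephereral-disk 100 COUNT, ephereral-disk.capacity 200 GB")
print(bad)
```

Run against the limits string from Step 7, this flags both misspelt keys, while the corrected ephemeral-disk.capacity string passes cleanly.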

Take #5 – Now it should work, right?

Cormacs-MacBook-Pro-8:.kube cormachogan$ photon service create -n kube-socrates -k KUBERNETES --master-ip 10.27.51.208 \

--etcd1 10.27.51.209 --load-balancer-ip 10.27.51.210 --container-network 10.2.0.0/16 --dns 10.27.51.252 \

--gateway 10.27.51.254 --netmask 255.255.255.0 -c 2 --vm_flavor cluster-small --ssh-key ~/.ssh/id_rsa.pub -d vsan-disk

Kubernetes master 2 static IP address (leave blank for none):

etcd server 2 static IP address (leave blank for none):

Creating service: kube-socrates (KUBERNETES)

Disk flavor: vsan-disk

Worker count: 2

Are you sure [y/n]? y

CREATE_SERVICE completed for 'service' entity 6be5ce48-81ff-4682-a5de-1066fb3fa316

Note: the service has been created with minimal resources. You can use the service now.

A background task is running to gradually expand the service to its target capacity.

You can run 'service show ' to see the state of the service.

Cormacs-MacBook-Pro-8:.kube cormachogan$

Success!
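One thing worth checking before raising the worker count is whether the ephemeral disk quota covers all the VMs. The sketch below is back-of-the-envelope only and rests on two assumptions of mine: that every K8S VM needs the same 39 GB that the etcd VM asked for in the Take #4 error, and that the control plane is one master, one etcd and one load-balancer VM. Neither is confirmed by anything other than the output shown above.

```python
DISK_PER_VM_GB = 39          # assumption: same as the etcd request in Take #4
CONTROL_VMS = 3              # assumption: one master, one etcd, one load balancer
PROJECT_EPHEMERAL_GB = 200   # project ephemeral-disk.capacity set after Take #4


def ephemeral_needed(workers):
    """Estimated ephemeral disk capacity in GB for the whole service."""
    return (CONTROL_VMS + workers) * DISK_PER_VM_GB


for workers in (2, 5):
    needed = ephemeral_needed(workers)
    print(workers, needed, needed <= PROJECT_EPHEMERAL_GB)
```

Under those assumptions, 2 workers need 195 GB and fit within the 200 GB quota, whereas 5 workers would need 312 GB and would call for a larger ephemeral-disk.capacity limit.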

Right, let’s monitor the deployment while the Kubernetes workers are getting deployed. We should see it go from ‘Maintenance’ to ‘Ready’:

Cormacs-MacBook-Pro-8:.kube cormachogan$ photon service list

ID Name Type State Worker Count

6be5ce48-81ff-4682-a5de-1066fb3fa316 kube-socrates KUBERNETES MAINTENANCE 2

Total: 1

MAINTENANCE: 1

Cormacs-MacBook-Pro-8:.kube cormachogan$ photon service list

ID Name Type State Worker Count

6be5ce48-81ff-4682-a5de-1066fb3fa316 kube-socrates KUBERNETES READY 2

Total: 1

READY: 1
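If you would rather script this check than re-run the command by hand, the state can be picked out of the ‘service list’ text. The Python sketch below assumes the column layout shown above (ID, Name, Type, State, Worker Count) and service names without spaces; service_state is a hypothetical helper of mine, and the sample text is simply pasted from my output.

```python
def service_state(listing, name):
    """Return the State column for the named service, or None if it is not listed."""
    for line in listing.splitlines():
        fields = line.split()
        # Data rows have exactly five columns: ID, Name, Type, State, Worker Count.
        if len(fields) == 5 and fields[1] == name:
            return fields[3]
    return None


sample = """ID Name Type State Worker Count
6be5ce48-81ff-4682-a5de-1066fb3fa316 kube-socrates KUBERNETES MAINTENANCE 2
Total: 1
MAINTENANCE: 1"""

print(service_state(sample, "kube-socrates"))
```

Feeding it the captured output of ‘photon service list’ in a loop, and stopping once it returns READY, would give a simple wait-until-ready check.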

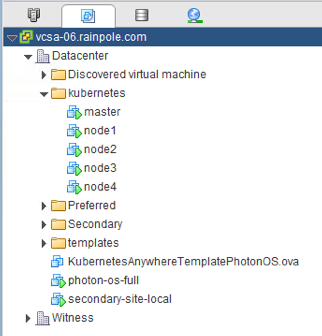

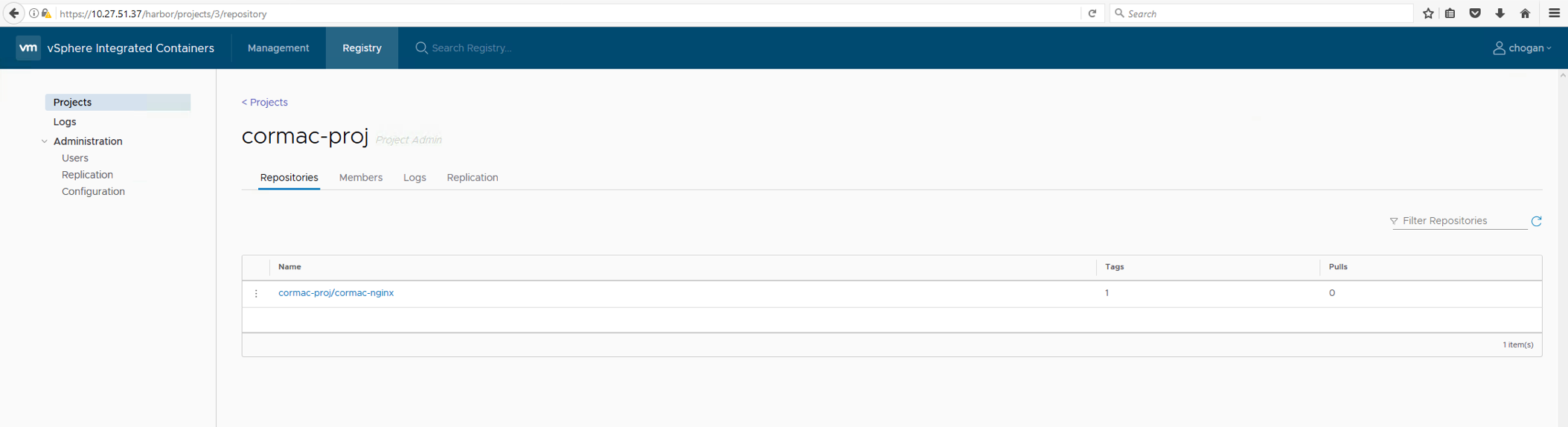

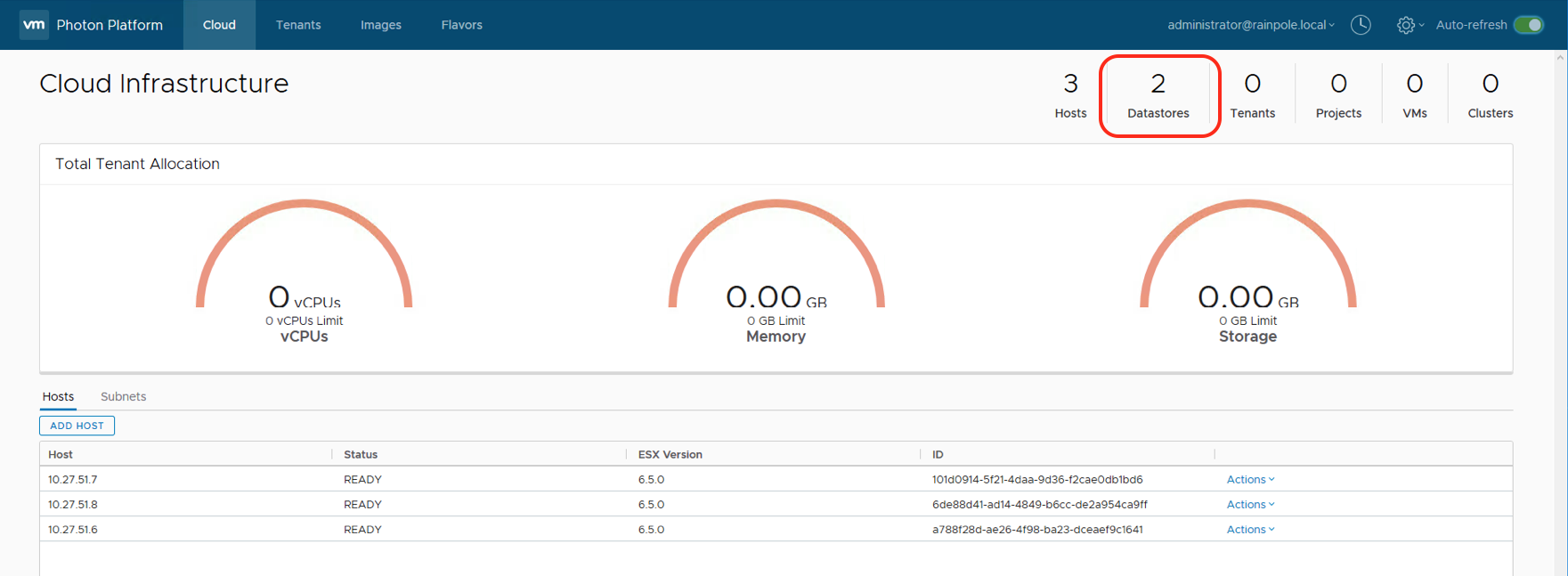

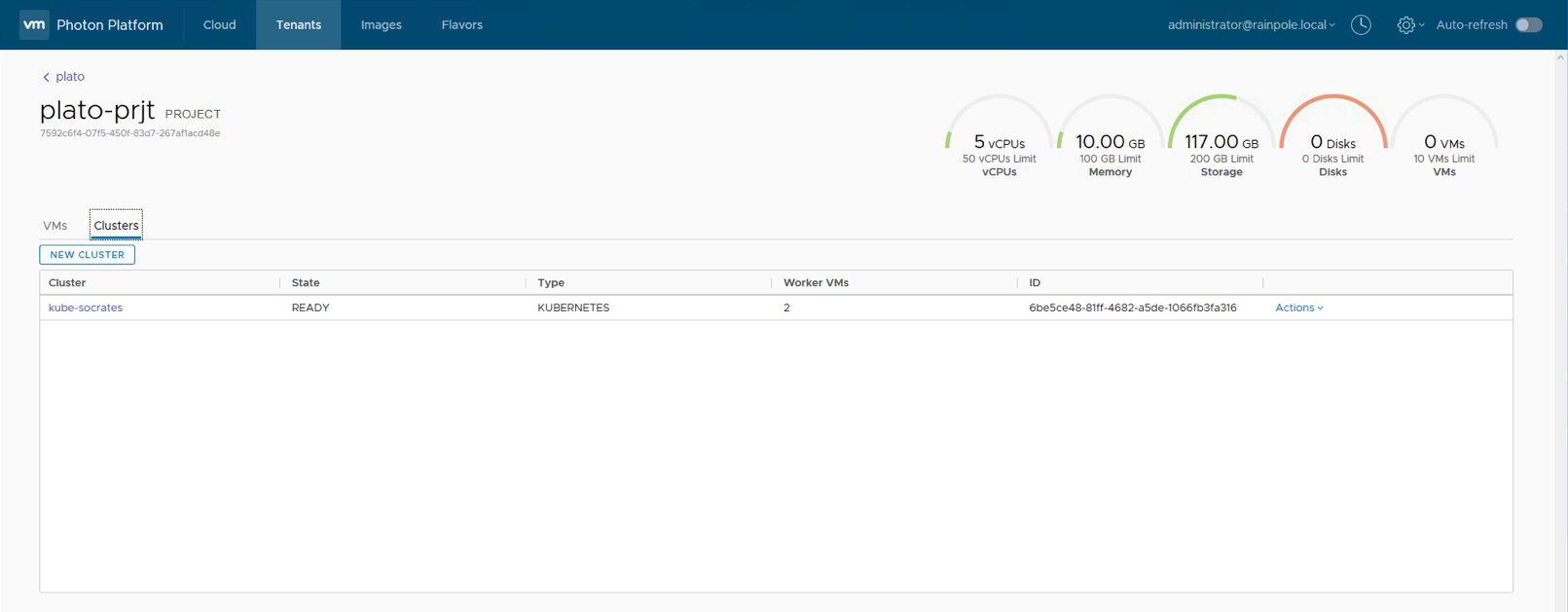

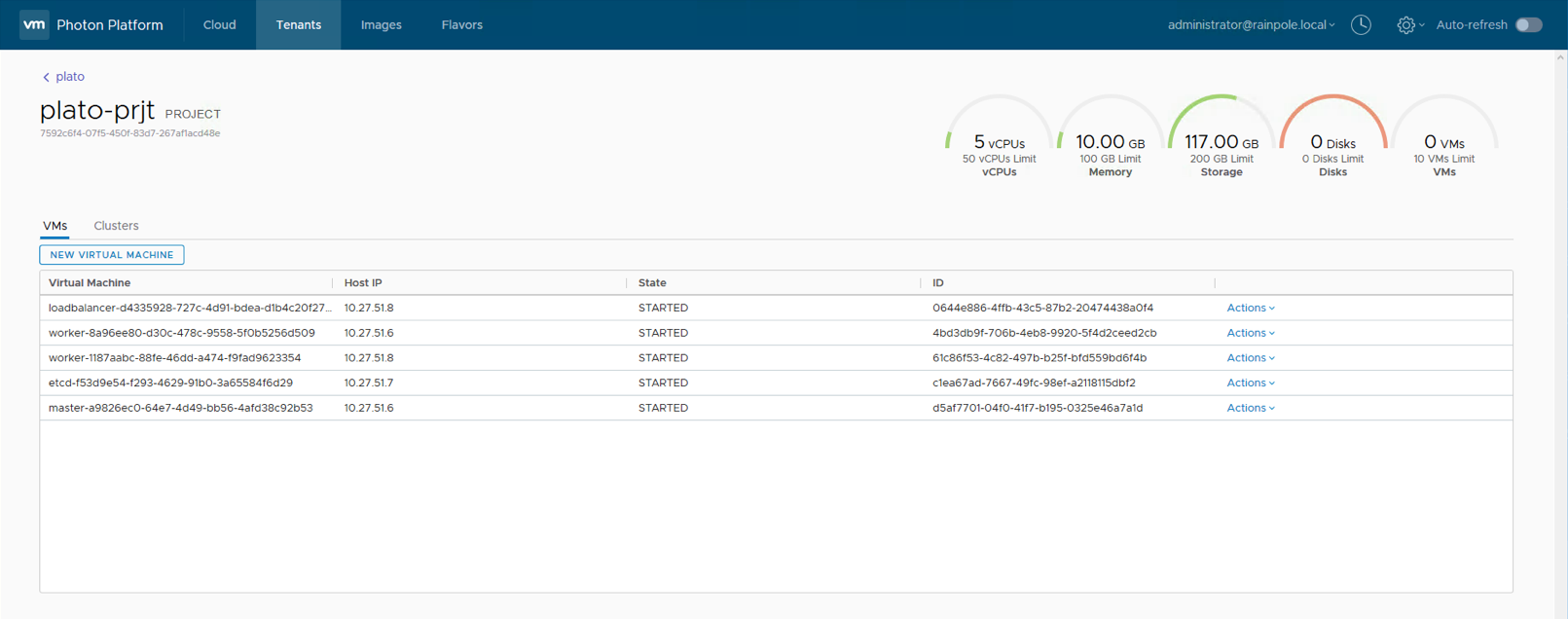

Step 9 – Check out the Photon Platform Dashboard

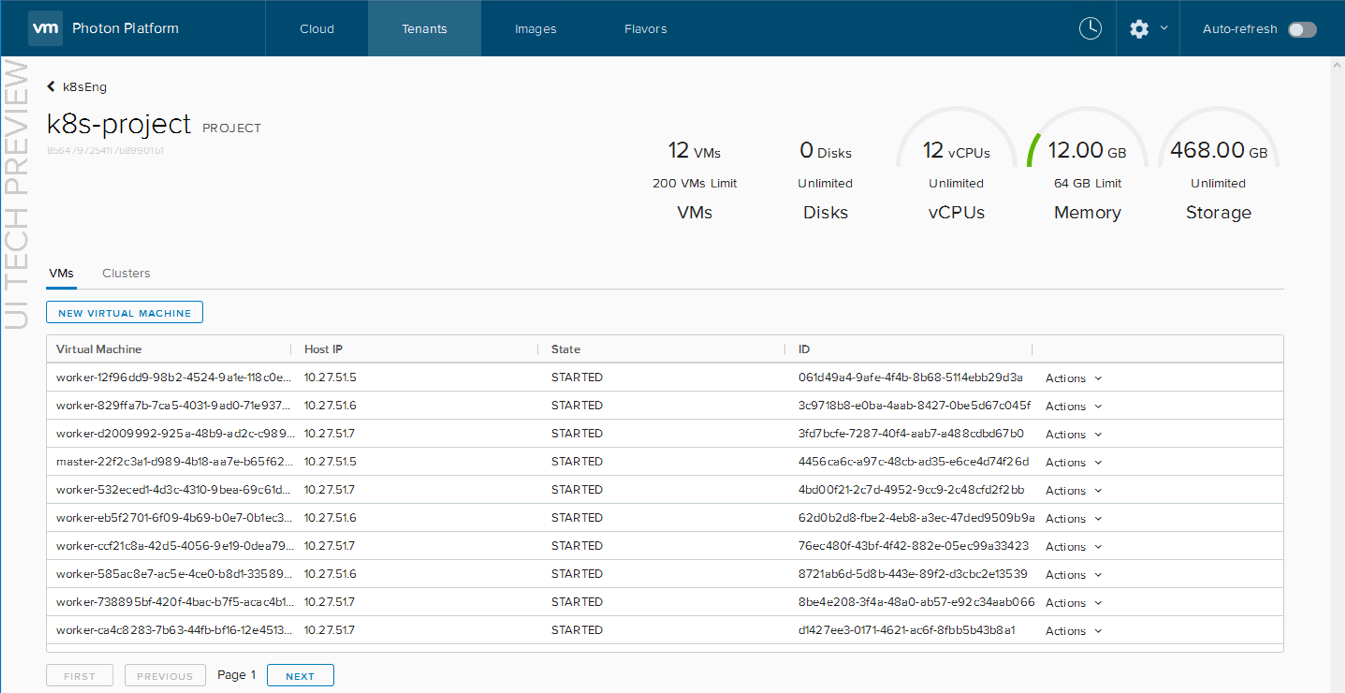

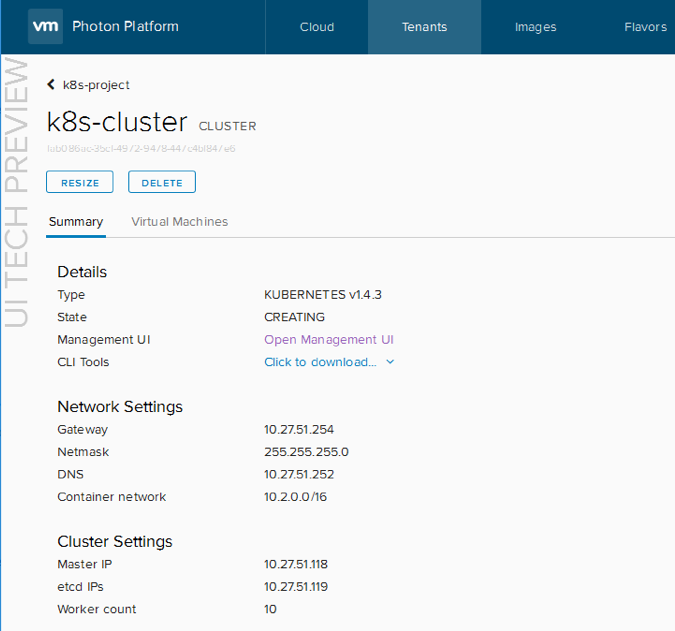

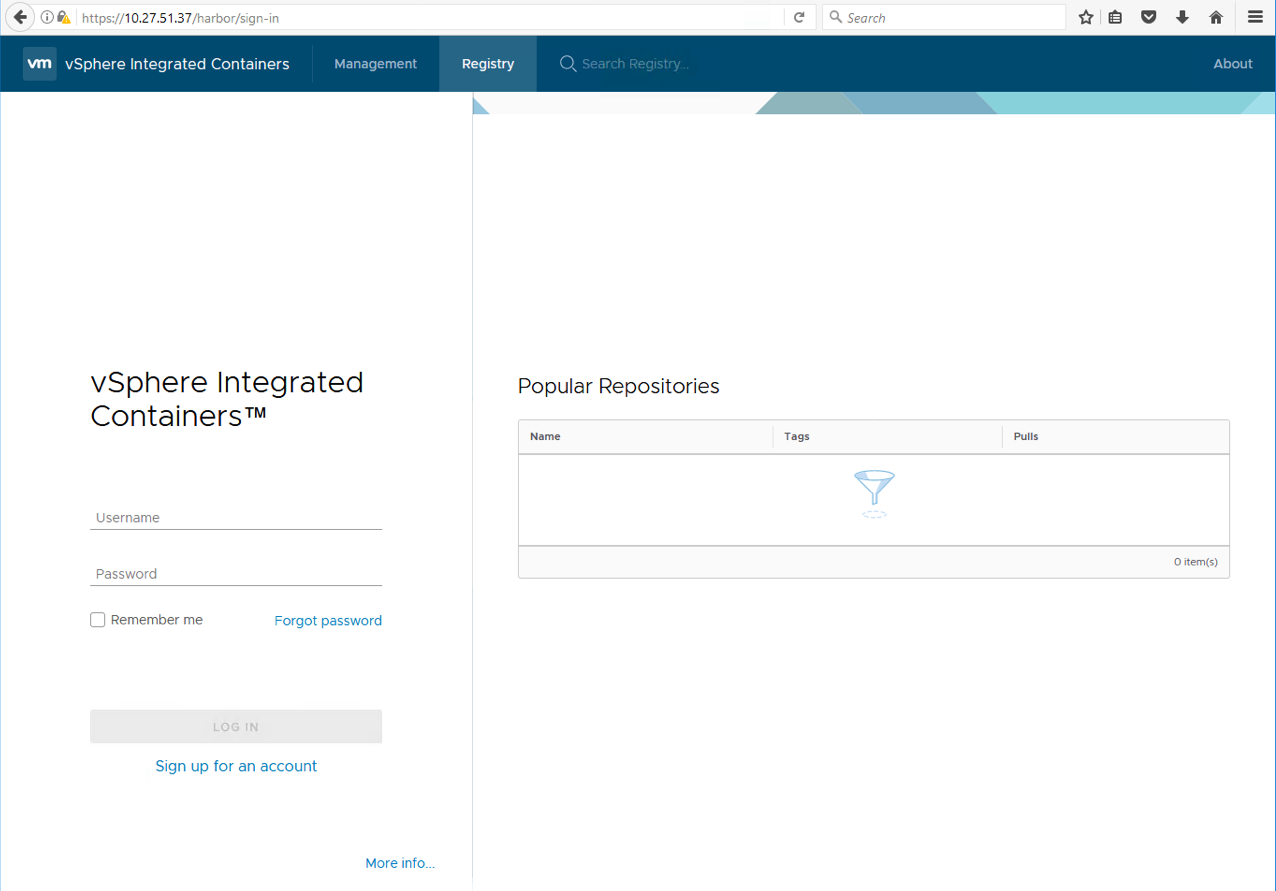

The Photon Platform dashboard can now be used to look at the new cluster and the running VMs. Here are some screenshots from my setup. The first is the Tenant > Project > Cluster view where you can see the Kubernetes service, and the second is the Tenant > Project > VMs view where you can see the VMs that we deployed to get K8S up and running:

![]()

![]()

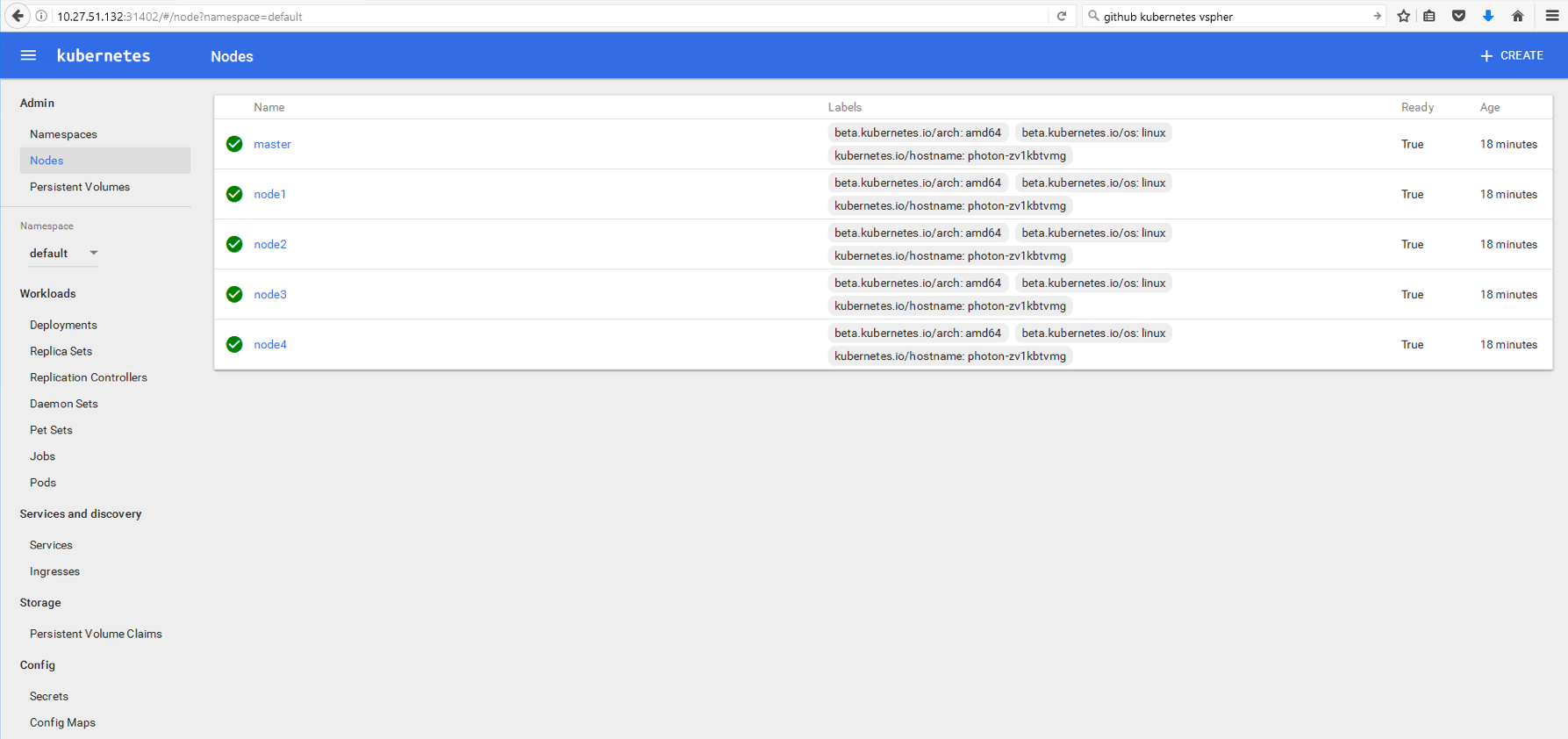

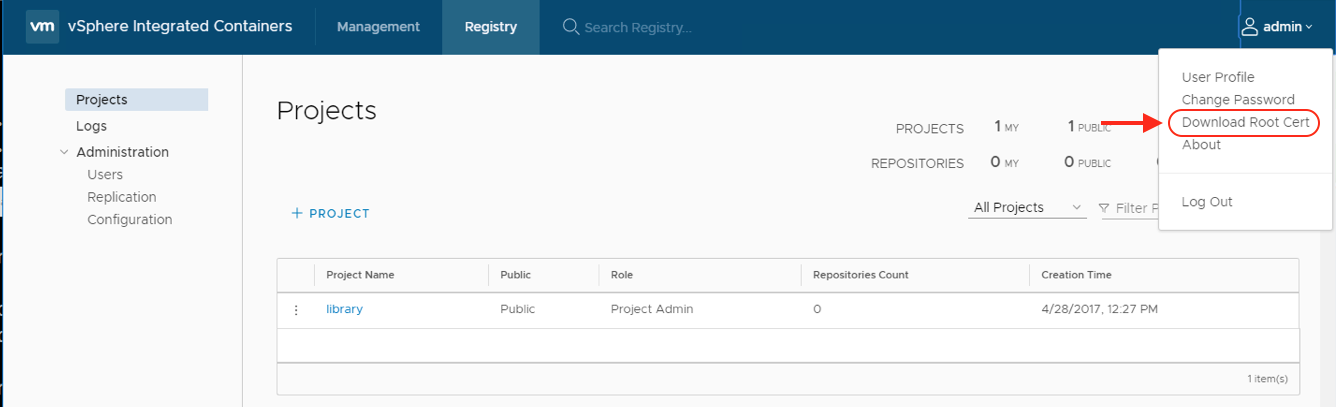

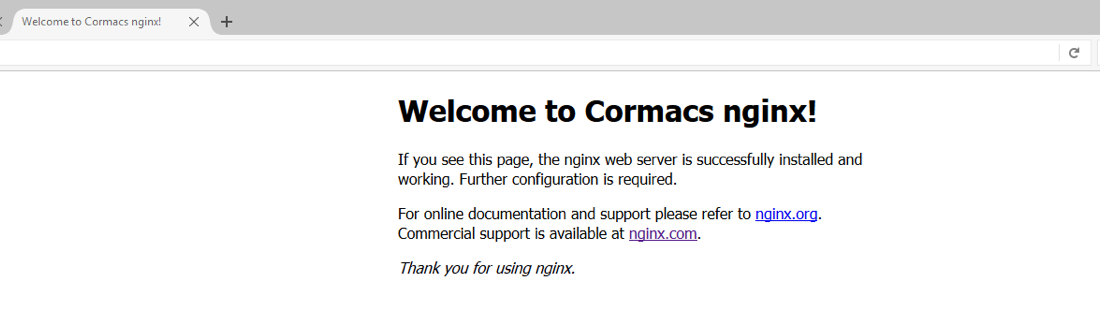

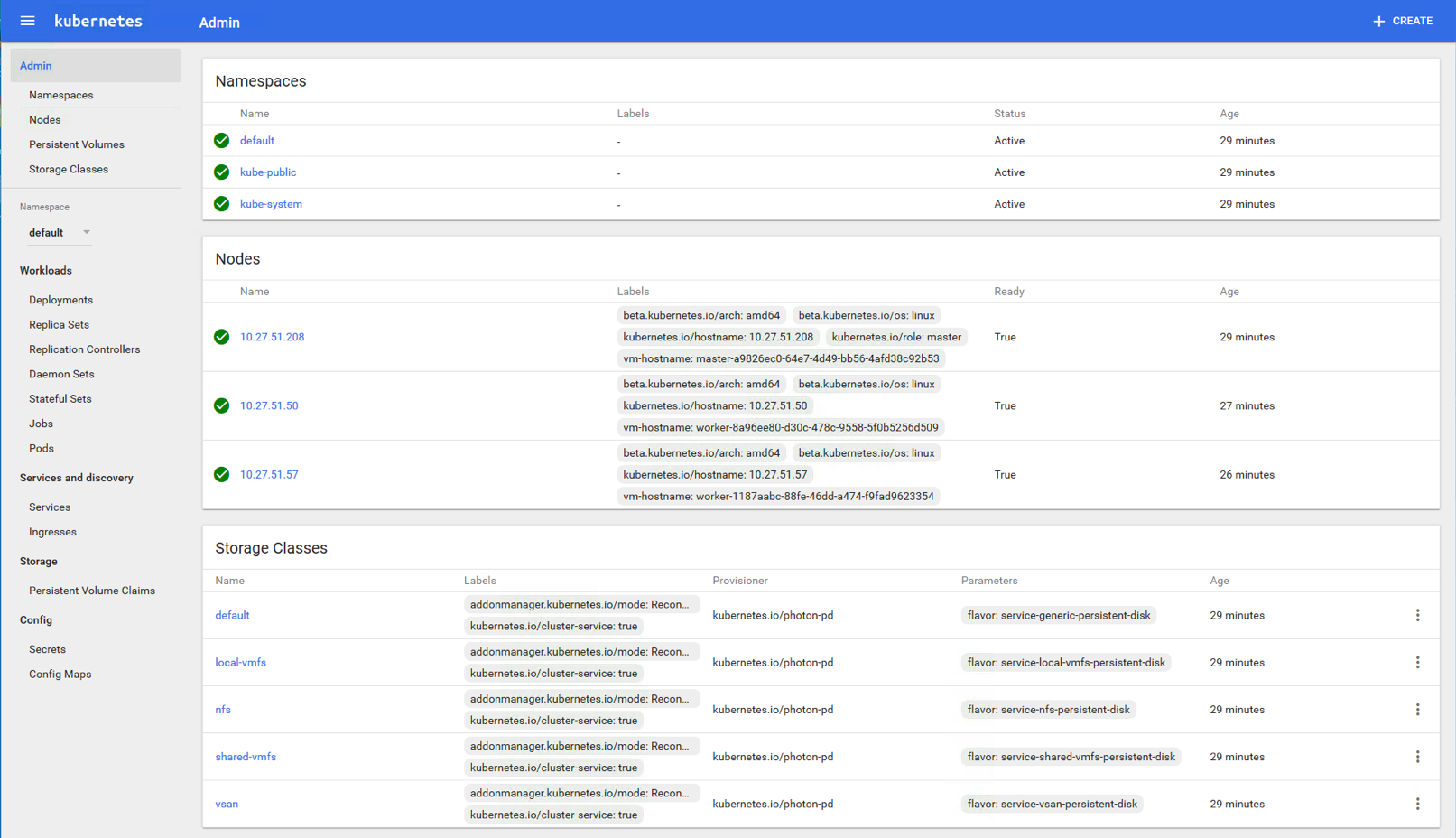

Step 10 – Log in to K8S

With Kubernetes now deployed, we can log in to the main dashboard to make sure everything is working. Just point a browser at https://<ip-of-k8s-load-balancer>:6443, and log in with the credentials admin/admin. If everything is working, the Admin view should look something like this:

![]()

Summary

OK, there were a couple of hiccups: the issue with the UI missing network information, and the photon CLI issue with ‘enable-cluster-type’. Both have been fed back to the team. Also, quotas take a little getting used to, but once you are familiar with them, they are far more flexible than the older ‘resource-ticket’. Had I taken a little more time to learn about them, I would not have had so much trial and error. Having said all that, it is still relatively straightforward to deploy K8S onto a vSAN datastore via Photon Platform v1.2, even using the CLI approach.

The post Deploy Kubernetes on Photon Platform 1.2 and VSAN appeared first on CormacHogan.com.

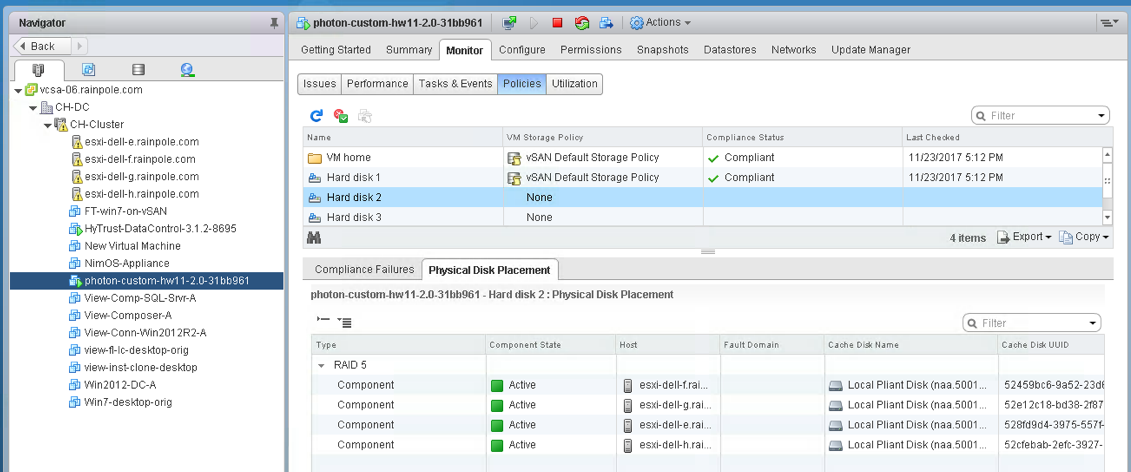

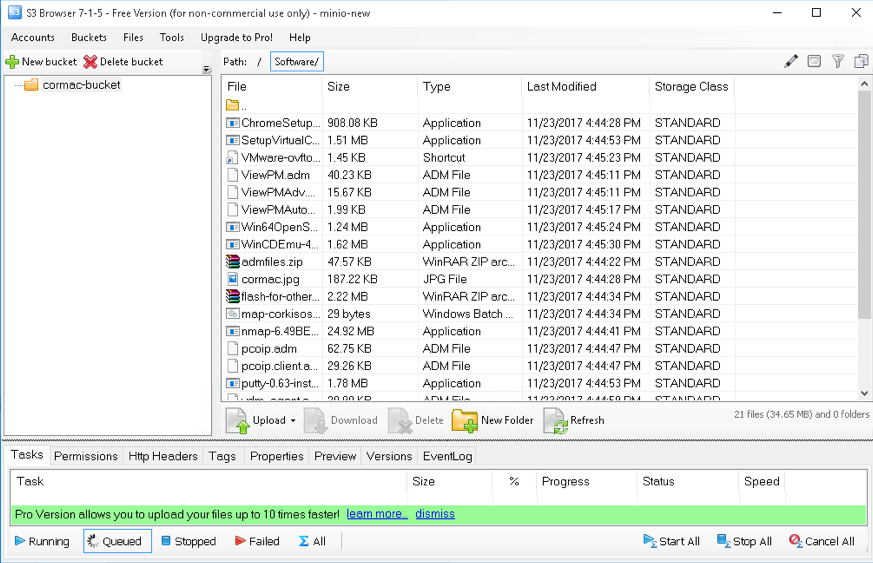

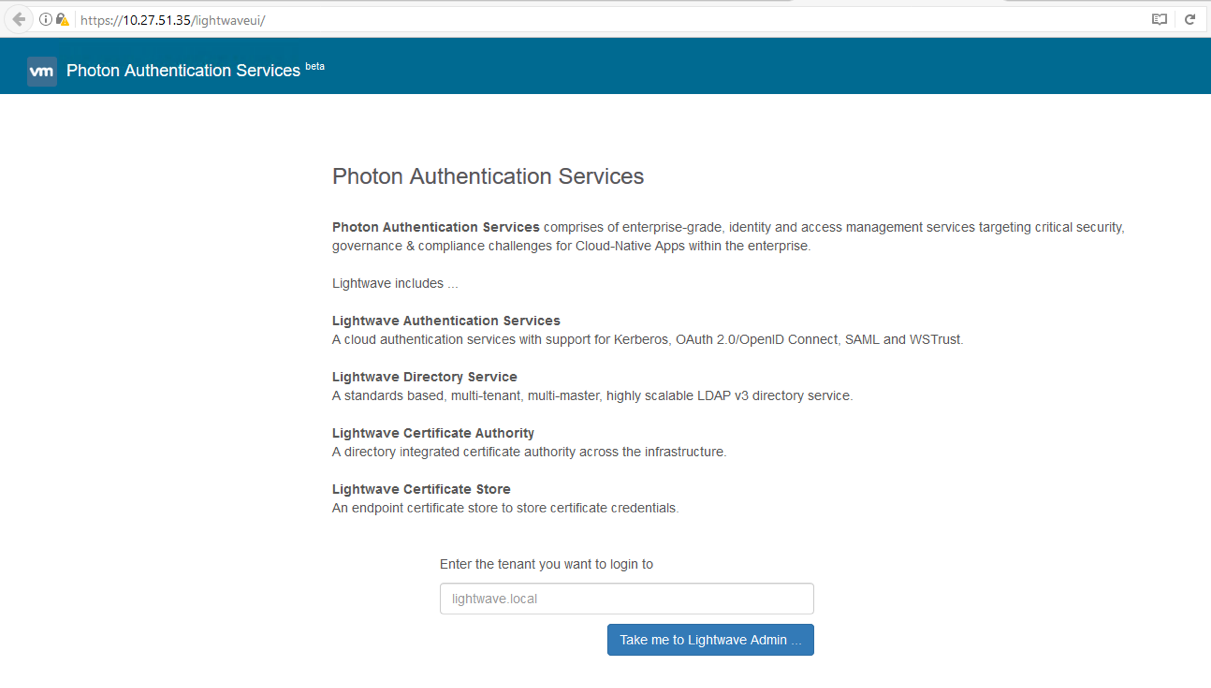

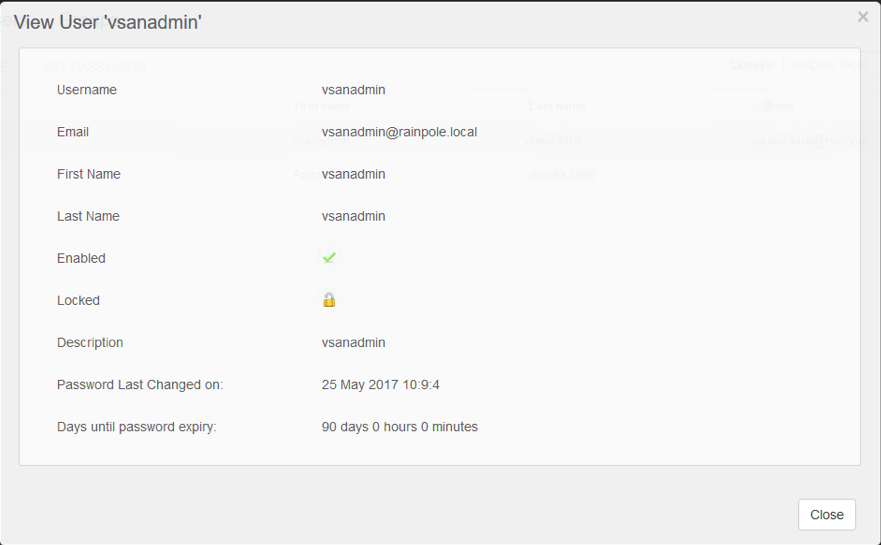

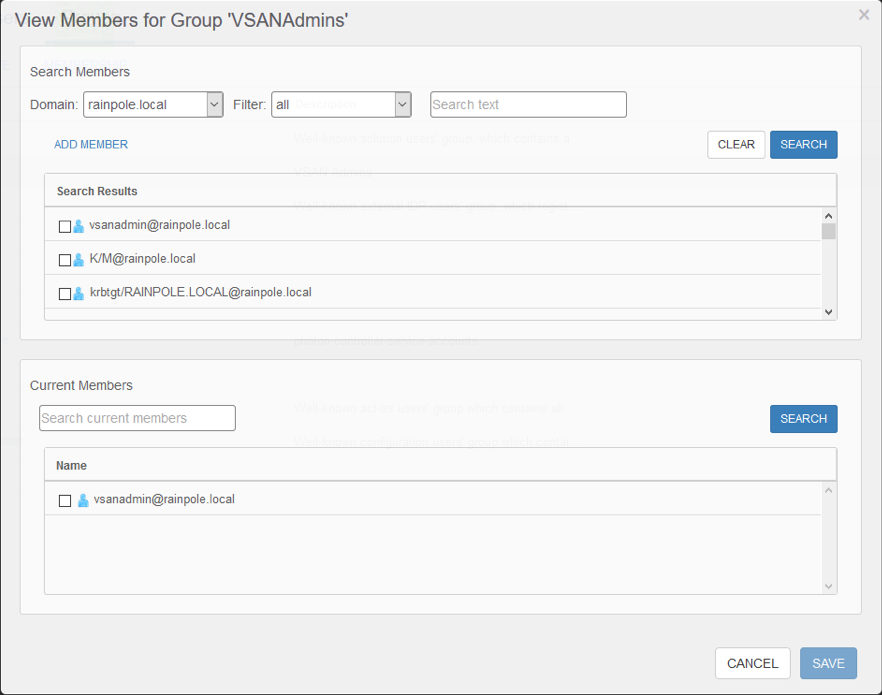

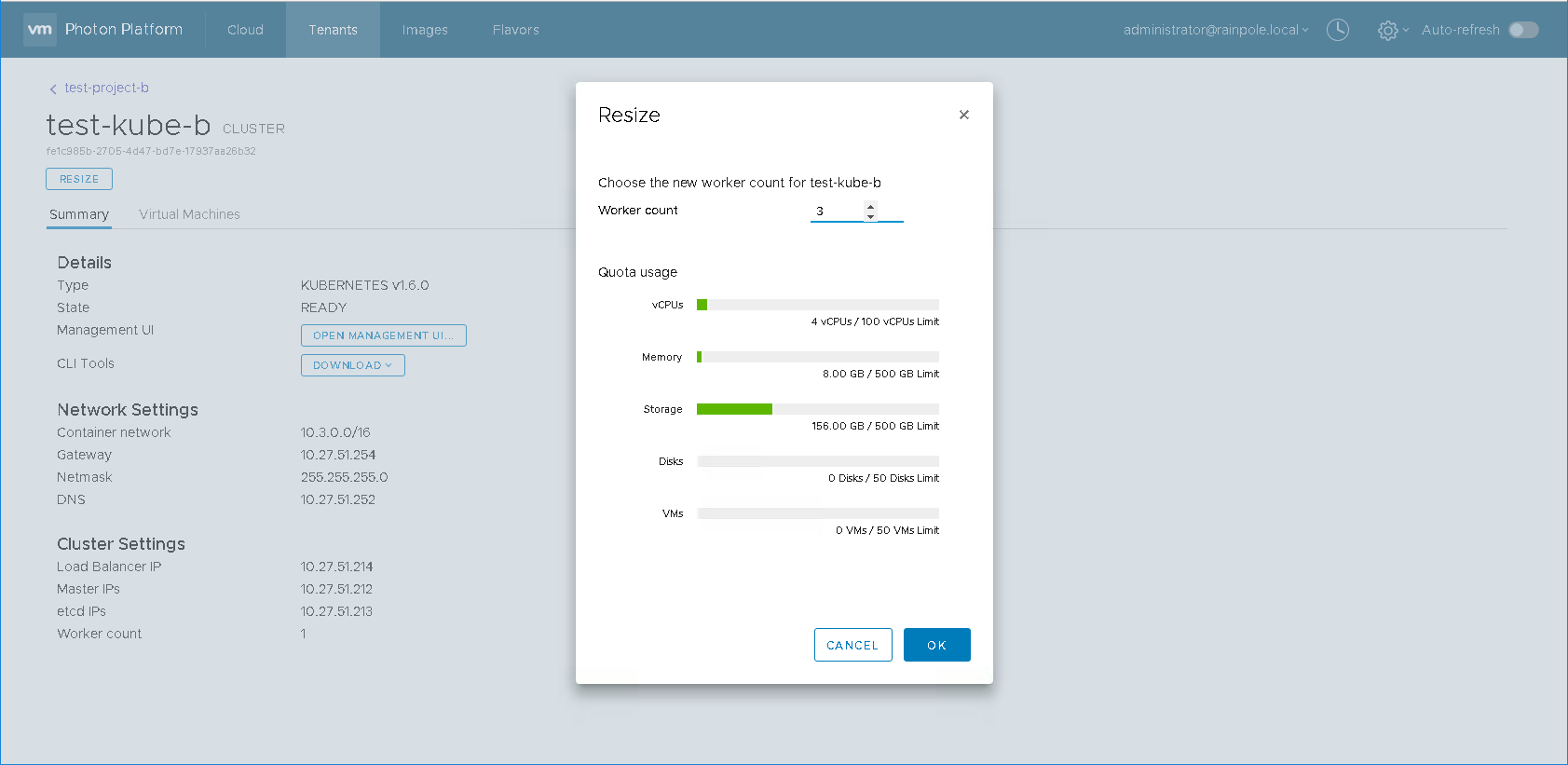

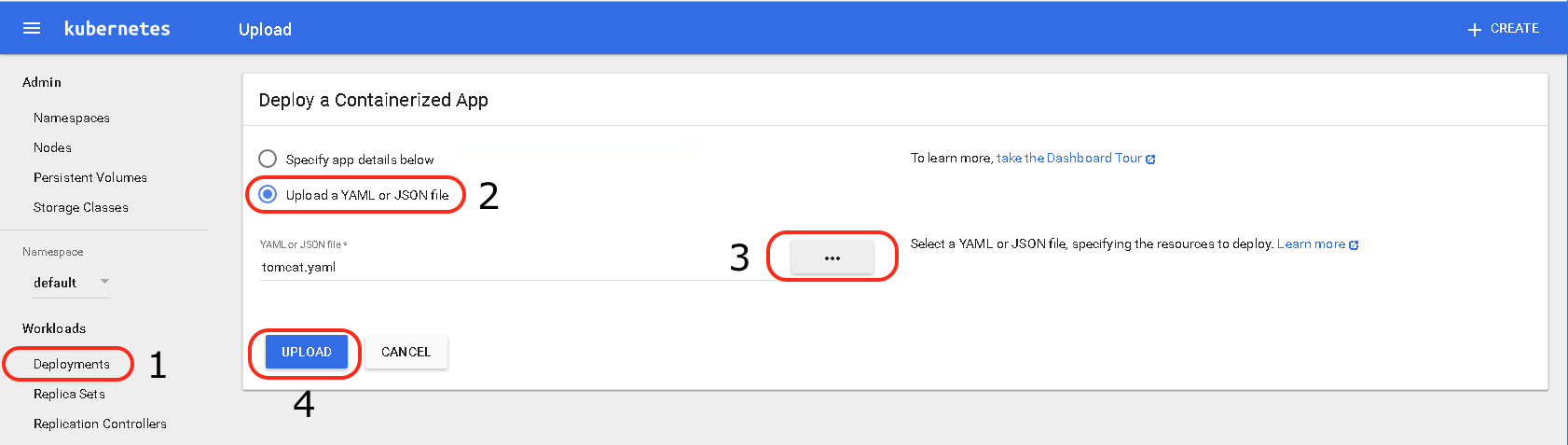

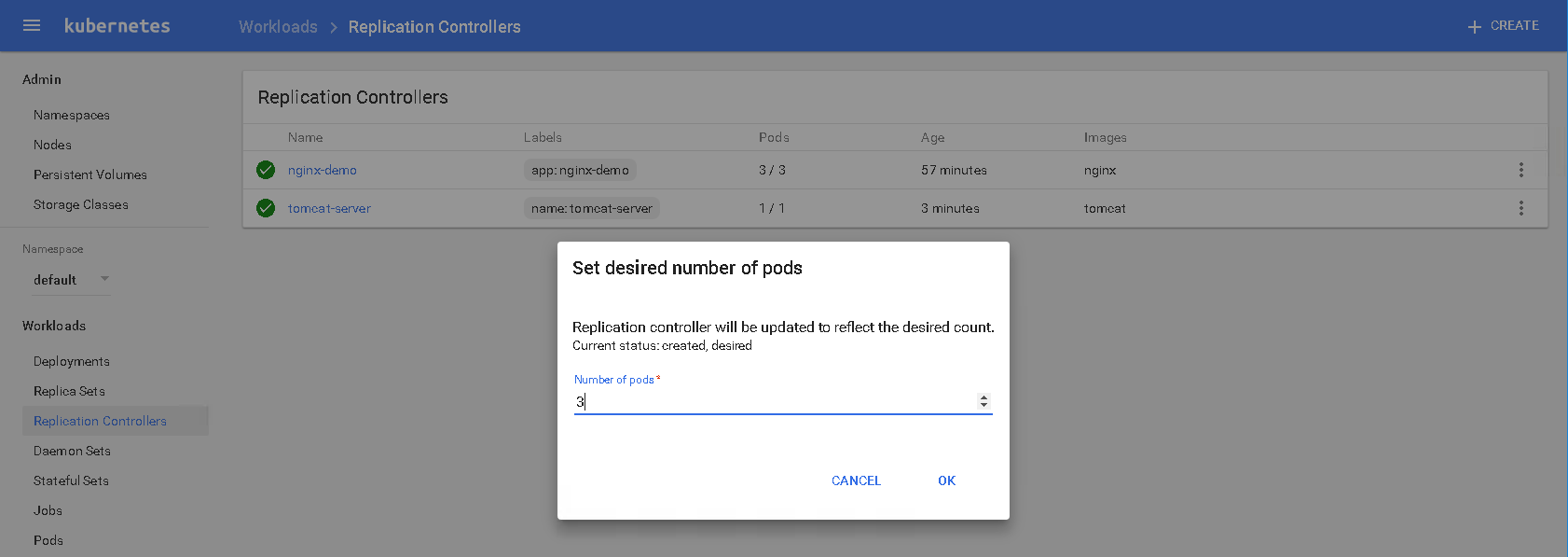

I mentioned in a previous post that we have recently released Photon Controller version 1.1, and one of the major enhancements was the inclusion of support for vSAN. I wrote about the steps to do this in the previous post, but now I want to show you how to utilize vSAN storage for the orchestration frameworks (e.g. Kubernetes) that you deploy on top of Photon Controller. In other words, I am going to describe the steps that need to be taken in order for these Kubernetes VMs (master, etcd, workers) to be able to consume the vsanDatastore that is now configured on the cloud ESXi hosts of Photon Controller. This is what I will show you in this post. I have already highlighted the fact that you can deploy Kubernetes as a service from the Photon Controller UI. This is showing you another way of achieving the same thing, but also to enable the vSAN datastore to be used by the K8S VMs.

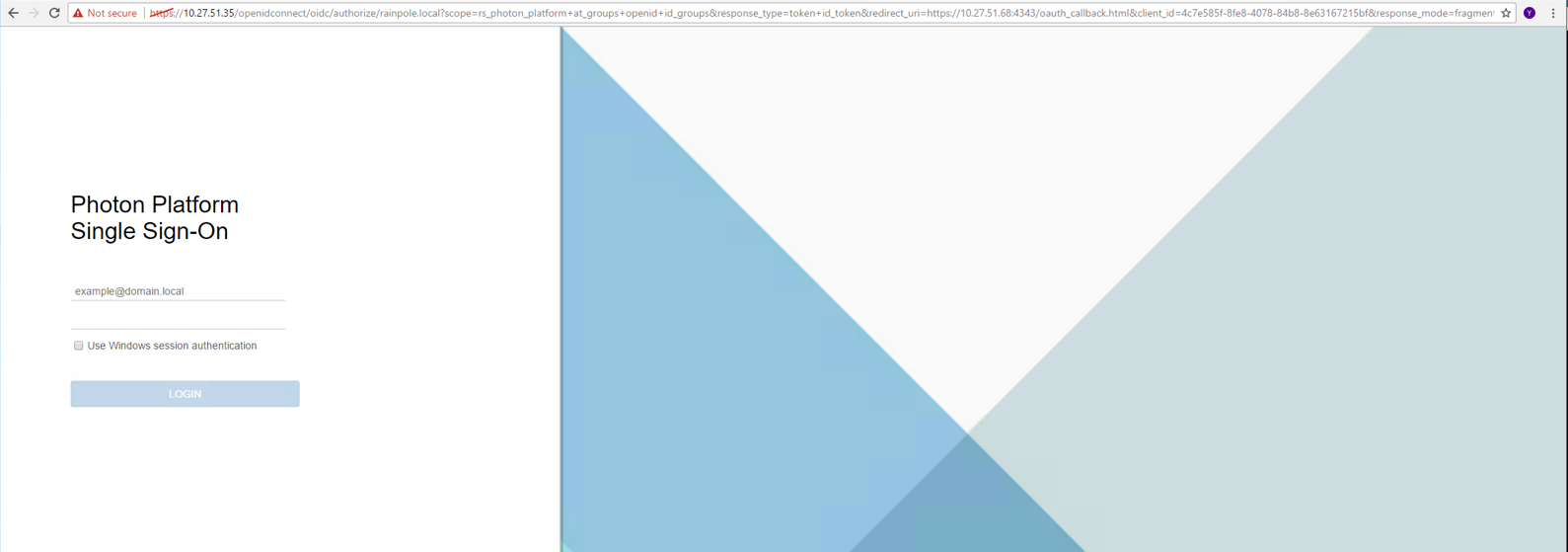

I mentioned in a previous post that we have recently released Photon Controller version 1.1, and one of the major enhancements was the inclusion of support for vSAN. I wrote about the steps to do this in the previous post, but now I want to show you how to utilize vSAN storage for the orchestration frameworks (e.g. Kubernetes) that you deploy on top of Photon Controller. In other words, I am going to describe the steps that need to be taken in order for these Kubernetes VMs (master, etcd, workers) to be able to consume the vsanDatastore that is now configured on the cloud ESXi hosts of Photon Controller. This is what I will show you in this post. I have already highlighted the fact that you can deploy Kubernetes as a service from the Photon Controller UI. This is showing you another way of achieving the same thing, but also to enable the vSAN datastore to be used by the K8S VMs. From there, click on Clusters, followed by clicking on the name of the cluster. In the summary tab, you can click on the option to Open Management UI, and this should launch the Kubernetes dashboard for you.

From there, click on Clusters, followed by clicking on the name of the cluster. In the summary tab, you can click on the option to Open Management UI, and this should launch the Kubernetes dashboard for you. The Kubernetes dashboard can then be handed off to your developers.

The Kubernetes dashboard can then be handed off to your developers.

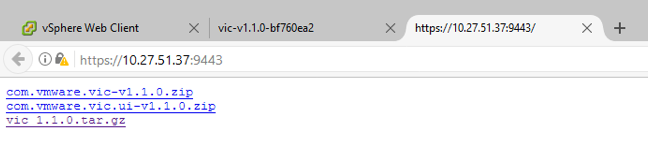

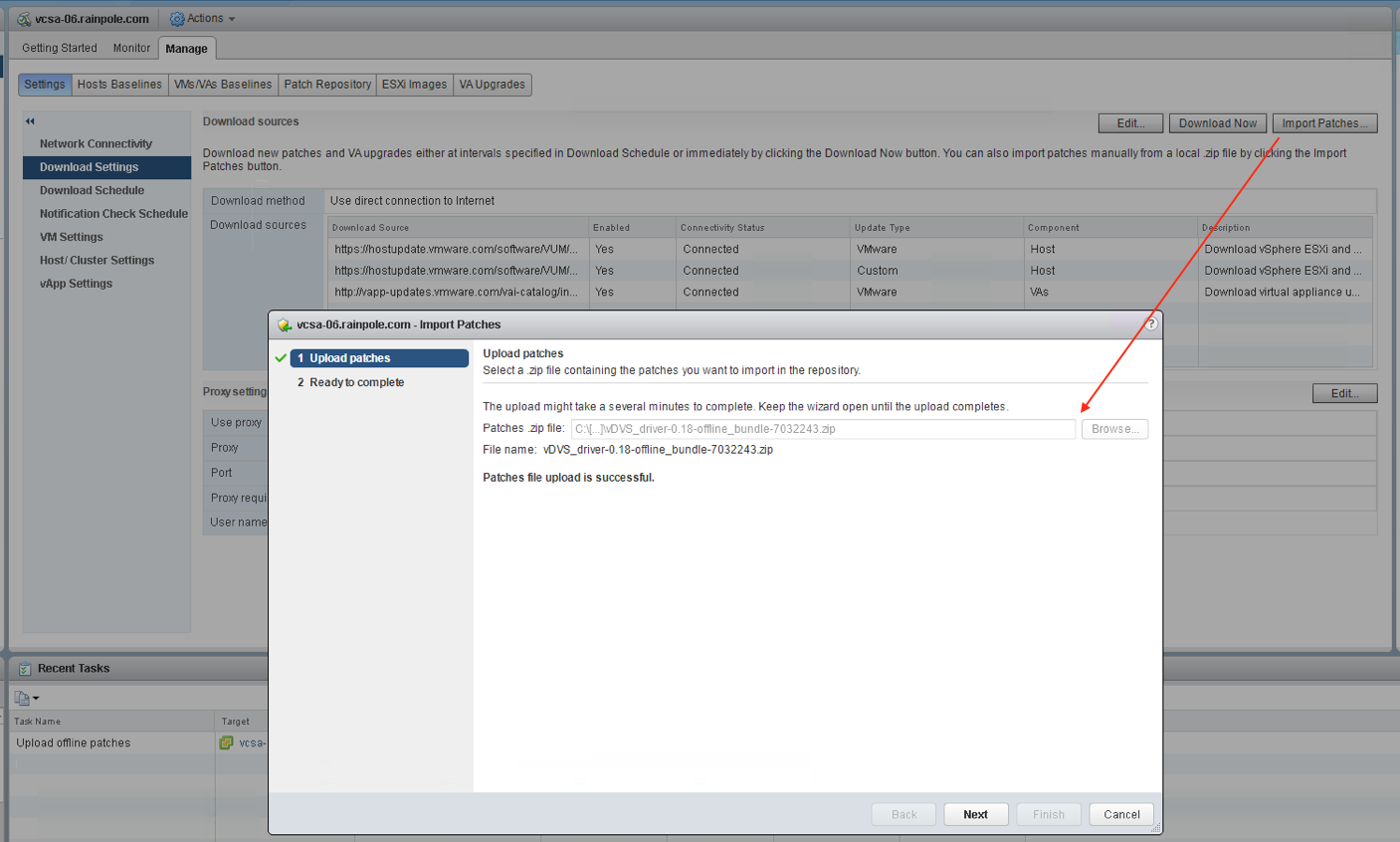

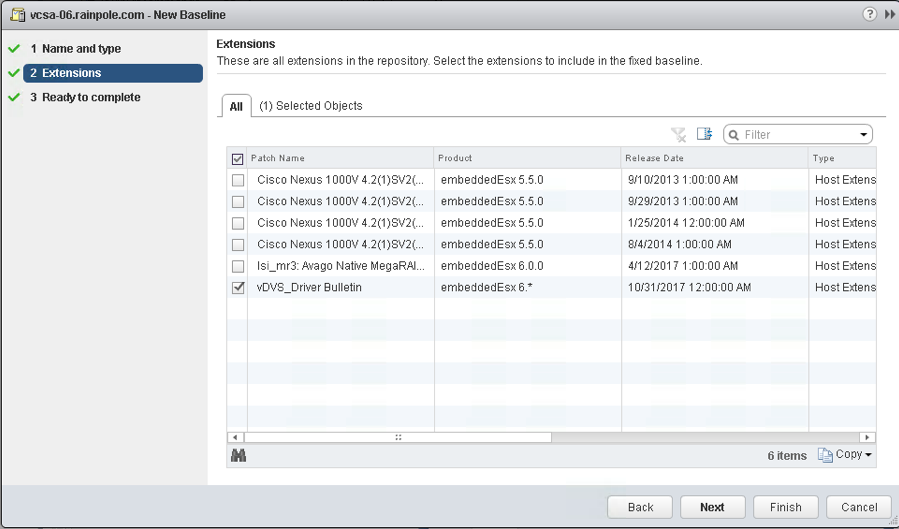

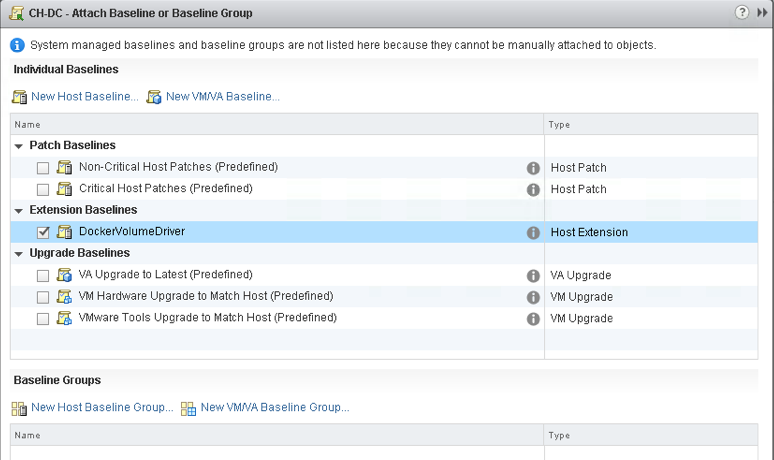

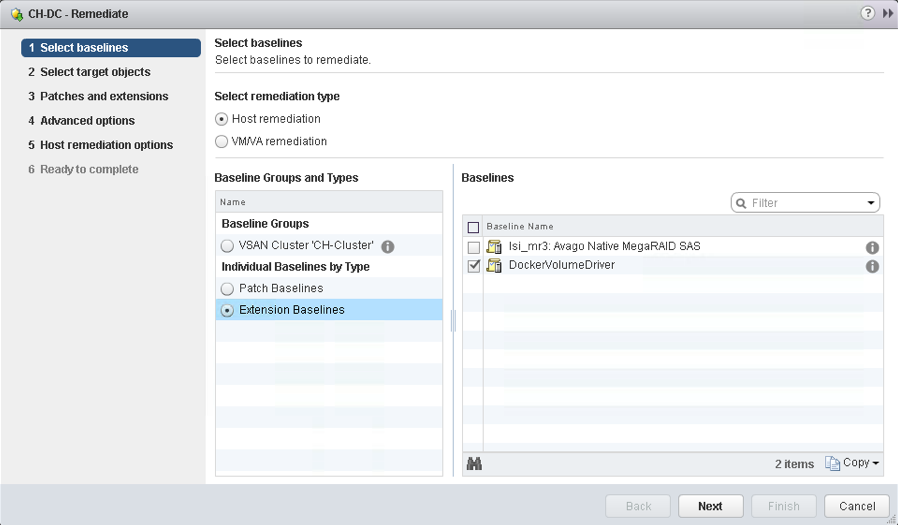

Now that the vDVS has been installed on all of my ESXi hosts in my vSAN cluster, let’s install the VM component, or to be more accurate, the Container Host component, since my Photon OS VM is my container host.

Now that the vDVS has been installed on all of my ESXi hosts in my vSAN cluster, let’s install the VM component, or to be more accurate, the Container Host component, since my Photon OS VM is my container host.