In previous posts we have looked at using a “cluster” for deploying Docker Swarm on top of Photon Controller. Of course, deploying Docker Swarm via the cluster management construct may not be what some customers wish to do, so now we have full support for “docker-machine” on Photon Controller as well. This allows you to create your own Docker Swarm clusters using the instructions provided by Docker. In this post, we will look at building the docker-machine driver plugin, setting up Photon Controller, and then the setup needed to deploy docker-machine hosts on Photon Controller.

You can find the software and additional information on GitHub.

*** Please note that at the time of writing, Photon Controller is still not GA ***

Step 1 – Prep Ubuntu

In this scenario, I am using an Ubuntu VM. These are the commands to prep that distro for the docker-machine driver plugin for Photon Controller:

apt-get update

apt-get install docker.io => install Docker

apt-get install golang => install the Go programming language

apt-get install genisoimage => needed for mkisofs
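Once the packages are installed, a quick way to confirm that the commands used later in this post are actually on the PATH is a small shell function like the one below. It is only a sketch: `missing_tools` is a hypothetical helper name, and the check for `mkisofs` assumes, as the post does, that the genisoimage package on this Ubuntu release provides a `mkisofs` command (some releases may only install `genisoimage`).

```shell
# Hypothetical helper: print the names of any commands that are not on PATH.
# An empty line means every command was found.
missing_tools() {
  missing=""
  for tool in "$@"; do
    # command -v succeeds only if the shell can locate the command
    command -v "$tool" >/dev/null 2>&1 || missing="$missing $tool"
  done
  # Strip the leading space added by the loop
  echo "${missing# }"
}

# Usage after the apt-get installs; docker, go and mkisofs should all be found:
# missing_tools docker go mkisofs
```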

Step 2 – Build docker-machine and the docker-machine driver plugin

Download and build the Photon Controller plugin for docker-machine. You can do this by first setting the environment variable GOPATH, and then running “go get github.com/vmware/docker-machine-photon-controller”. I simply created a new directory called /GO, set GOPATH to it, changed into it with cd /GO, and then ran the go get.

Once the code is downloaded, change to the “src/github.com/vmware/docker-machine-photon-controller” directory under GOPATH and run the “make build” command. This creates the binary in the bin directory. Finally, run “make install”, which copies the binary to the /usr/local/bin directory.

The next step is to build the docker-machine binary. The source was pulled down earlier by the go get command, but you will need to change to the “src/github.com/docker/machine” directory and run “make build” and “make install”.
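The build steps above can be collected into one function. This is a sketch under a few assumptions: GOPATH defaults to /GO as in the post, `go get` behaves the way it did in the GOPATH-era Go release used at the time (recent Go releases handle `go get` differently), and `make install` may need root to write to /usr/local/bin. The `run` wrapper and `DRY_RUN` variable are hypothetical additions that let you print the commands instead of executing them.

```shell
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

build_machine_and_plugin() {
  gopath="${GOPATH:-/GO}"
  export GOPATH="$gopath"
  run mkdir -p "$gopath" || return 1
  # Fetch the Photon Controller driver plugin; per the post this also pulls the docker/machine source
  run go get github.com/vmware/docker-machine-photon-controller || return 1
  # Build the driver plugin, then copy it to /usr/local/bin (may require sudo)
  plugin_src="$gopath/src/github.com/vmware/docker-machine-photon-controller"
  run make -C "$plugin_src" build || return 1
  run make -C "$plugin_src" install || return 1
  # Build and install docker-machine itself
  machine_src="$gopath/src/github.com/docker/machine"
  run make -C "$machine_src" build || return 1
  run make -C "$machine_src" install
}
```

`make -C <dir>` is used instead of `cd` so the function never changes the caller's working directory. Try `DRY_RUN=1 build_machine_and_plugin` first to review the commands.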

Note that you do not run the driver plugin binary directly. docker-machine calls it when you run the “docker-machine create” command with the -d option, which you will see shortly. Verify that “docker-machine” is working by running it and checking that it prints its “usage” output.

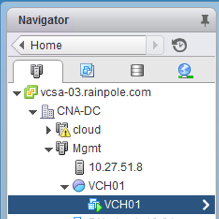
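Besides the usage output, it can help to confirm that docker-machine will be able to find the plugin. The sketch below assumes docker-machine's convention of locating external drivers as executables named `docker-machine-driver-<name>` on the PATH, so `-d photon` needs a `docker-machine-driver-photon` binary; `check_machine_install` is a hypothetical helper name.

```shell
# Hypothetical helper: report whether docker-machine and the Photon driver plugin are on PATH.
# Assumes the plugin binary follows the docker-machine-driver-<name> naming convention.
check_machine_install() {
  status=0
  for bin in docker-machine docker-machine-driver-photon; do
    if command -v "$bin" >/dev/null 2>&1; then
      echo "found: $(command -v "$bin")"
    else
      echo "missing: $bin" >&2
      status=1
    fi
  done
  return $status
}

# Usage: check_machine_install && docker-machine
```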

Step 3 – Get Photon Controller ready

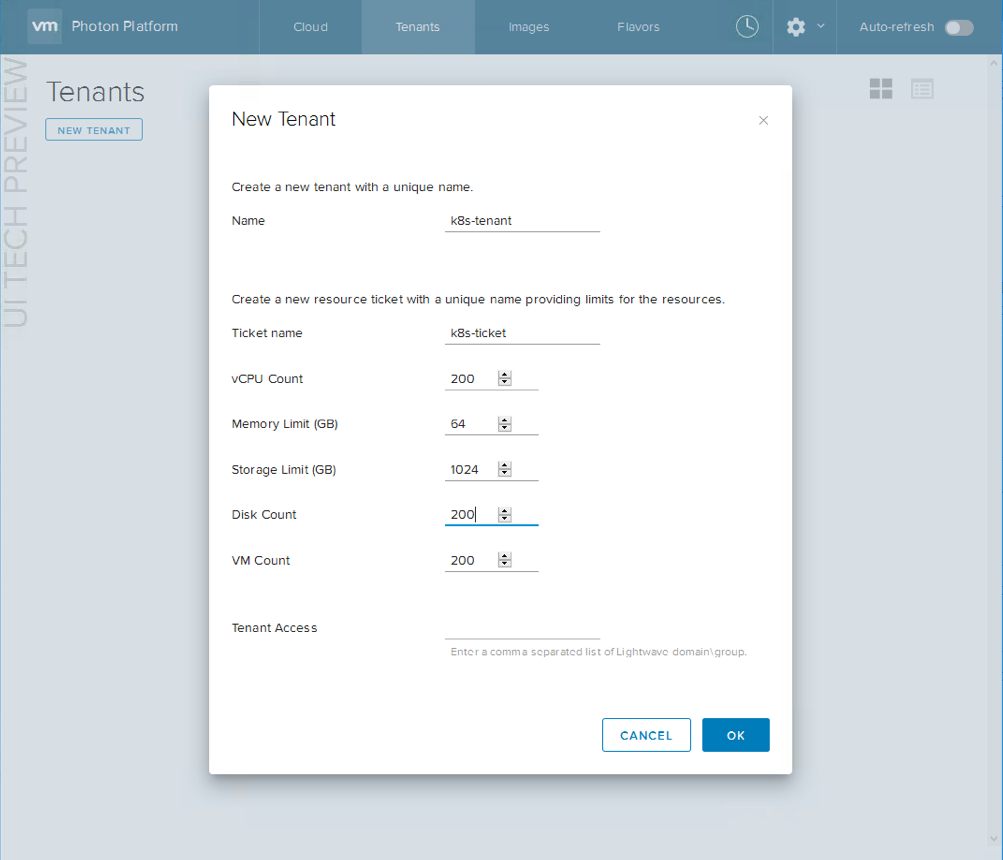

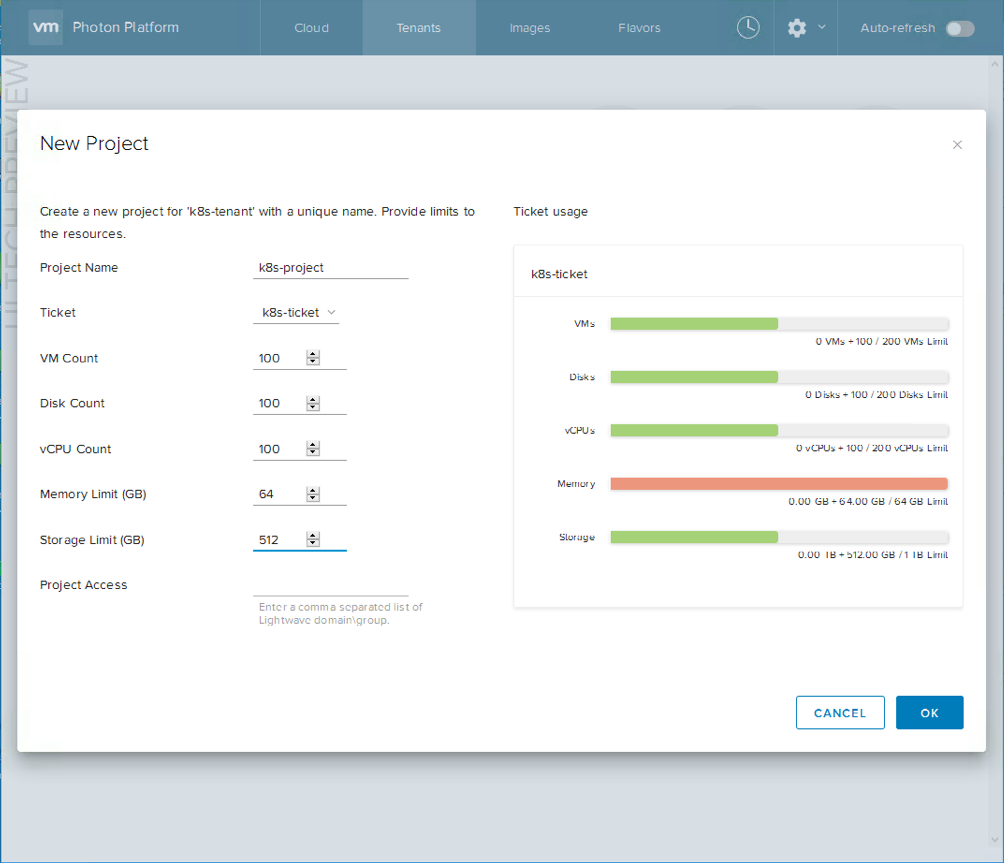

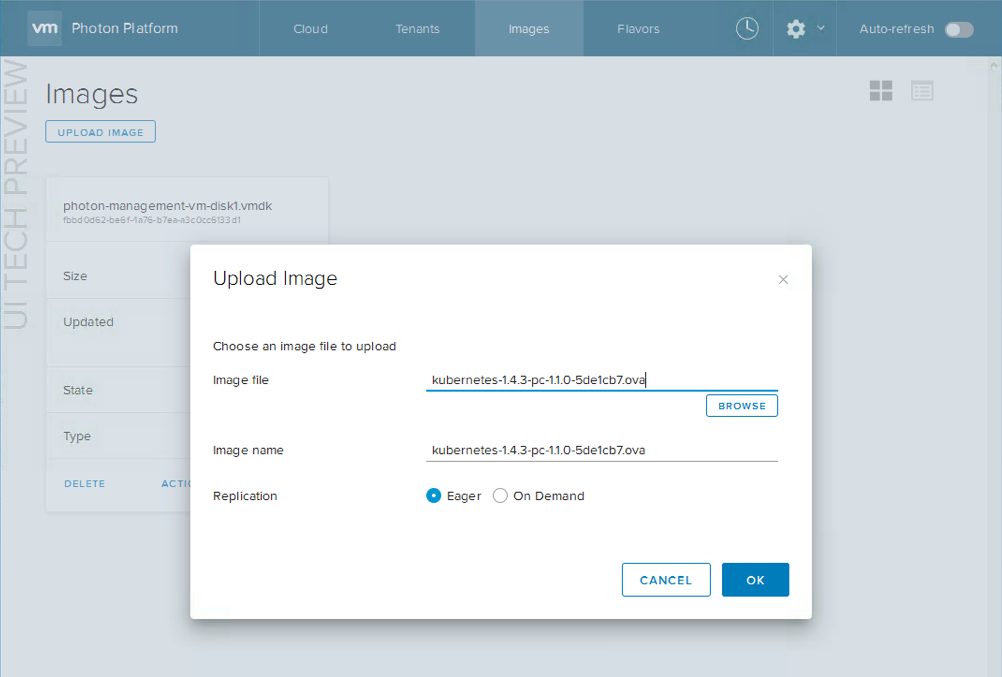

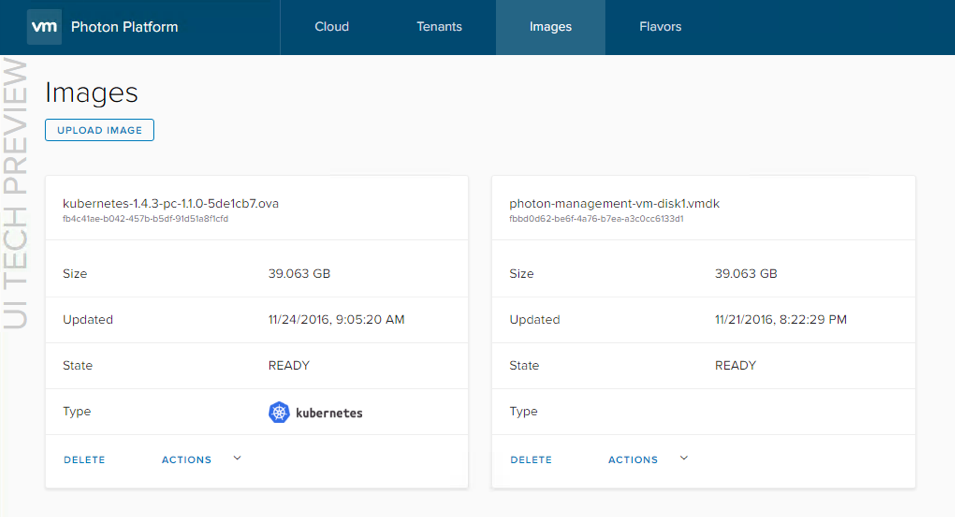

You have to do the usual setup in Photon Controller, such as creating a tenant, project, image, and so on. I won’t repeat the steps here as they have been covered in multiple posts already, such as this one here on Docker Swarm. The image I am using in this example is Debian 8.2, which you can get from Bintray here. docker-machine also has one additional requirement: a VM flavor and a disk flavor. These are the flavors I created:

> photon flavor create -k vm -n DockerFlavor -c "vm 1.0 COUNT, vm.flavor.core-100 1.0 COUNT, vm.cpu 1.0 COUNT, vm.memory 2.0 GB, vm.cost 1.0 COUNT"

> photon flavor create -k ephemeral-disk -n DockerDiskFlavor -c "ephemeral-disk 1.0 COUNT, ephemeral-disk.flavor.core-100 1.0 COUNT, ephemeral-disk.cost 1.0 COUNT"

Note the names of the flavors, as we will need to reference these shortly. OK. We’re now ready to create a docker-machine on this Photon Controller setup.
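Because the flavor names have to match the variables set in the next step, one option is to keep them in one place. The sketch below wraps the two `photon flavor create` commands from above (flavor names and cost strings copied unchanged) and then exports the names for docker-machine. `create_docker_flavors`, `run` and `DRY_RUN` are hypothetical helpers, and depending on your photon CLI version the commands may prompt for confirmation.

```shell
# Flavor names from the post; change them here and the exports below follow.
VM_FLAVOR="DockerFlavor"
DISK_FLAVOR="DockerDiskFlavor"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_docker_flavors() {
  run photon flavor create -k vm -n "$VM_FLAVOR" \
    -c "vm 1.0 COUNT, vm.flavor.core-100 1.0 COUNT, vm.cpu 1.0 COUNT, vm.memory 2.0 GB, vm.cost 1.0 COUNT" || return 1
  run photon flavor create -k ephemeral-disk -n "$DISK_FLAVOR" \
    -c "ephemeral-disk 1.0 COUNT, ephemeral-disk.flavor.core-100 1.0 COUNT, ephemeral-disk.cost 1.0 COUNT" || return 1
  # Keep the docker-machine settings in step with the flavor names just created
  export PHOTON_VM_FLAVOR="$VM_FLAVOR" PHOTON_DISK_FLAVOR="$DISK_FLAVOR"
}
```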

Step 4 – Setup ENV, get RSA key, create cloud-init.iso

Back on my Ubuntu VM, I need to set a bunch of environment variables that reflect my Photon Controller config. These are what I need to set up:

export PHOTON_DISK_FLAVOR=DockerDiskFlavor

export PHOTON_VM_FLAVOR=DockerFlavor

export PHOTON_PROJECT=a1b993e6-3838-43f7-b4fa-3870cdc0ea76

export PHOTON_ENDPOINT=http://10.27.44.34

export PHOTON_IMAGE=21a0cbf6-5a03-4d2c-919c-ccf6ea9c432b

PHOTON_PROJECT and PHOTON_IMAGE both take IDs rather than names. Also note that no port is given in the endpoint; it is simply http:// followed by the IP address of the Photon Controller.
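Since a stray port on the endpoint is an easy mistake, a string-only check like the following can catch it before docker-machine runs. It is a sketch: `check_photon_endpoint` is a hypothetical helper, it assumes an IPv4 address as in the post (an IPv6 literal would be rejected because of its colons), it also accepts https:// as an assumption, and it does not contact the controller.

```shell
# Hypothetical helper: check PHOTON_ENDPOINT-style strings for a scheme plus address, no port.
check_photon_endpoint() {
  ep="$1"
  case "$ep" in
    http://*|https://*) ;;
    *) echo "endpoint should start with http:// or https://: $ep" >&2; return 1 ;;
  esac
  # Drop the scheme and any single trailing slash, leaving just the address
  host="${ep#*://}"
  host="${host%/}"
  case "$host" in
    ""|*:*|*/*) echo "endpoint should be just the address, with no port or path: $ep" >&2; return 1 ;;
  esac
  return 0
}

# Usage: check_photon_endpoint "$PHOTON_ENDPOINT"
```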

The next part of the config is to decide if you are going to use the default SSH credentials, or create your own. If you wish to use the default, then simply add two new environment variables:

export PHOTON_SSH_USER=docker

export PHOTON_SSH_USER_PASSWORD=tcuser

However, if you wish to use your own credentials for SSH, first create a public/private RSA key pair with the following command:

$ ssh-keygen -t rsa -b 4096 -C "chogan@vmware.com"
Generating public/private rsa key pair.
Enter file in which to save the key (/home/cormac/.ssh/id_rsa):
Created directory '/home/cormac/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/cormac/.ssh/id_rsa.
Your public key has been saved in /home/cormac/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:efcA0/SH6mKkrMkGeOjfsdNtct/2Aq01K1nmdOffbz8 chogan@vmware.com
The key's randomart image is:
+---[RSA 4096]----+
| . |
| o . . |
| o . o .|
| . o . . |
| o S o +. |
| o o . + o.oB o|
| . . ...o.o .X.=.|
| . oo=o.+.+.=E+|
| ...*. + ..o.+@|
+----[SHA256]-----+

This creates the private key in /home/cormac/.ssh/id_rsa and the public key in /home/cormac/.ssh/id_rsa.pub. Once these are created, we need to set an environment variable that points to the private key:

export PHOTON_SSH_KEYPATH=/home/cormac/.ssh/id_rsa
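If you would rather script this step, the key pair can be created non-interactively and the variable exported in one go. This is a sketch under stated assumptions: `ensure_ssh_key` is a hypothetical helper, it uses an empty passphrase (`-N ""`) on the assumption that docker-machine needs to use the key unattended, and it leaves an existing key untouched.

```shell
# Hypothetical helper: create an RSA key pair only if it is missing,
# then point PHOTON_SSH_KEYPATH at the private key.
ensure_ssh_key() {
  keypath="$1"
  comment="$2"
  if [ ! -f "$keypath" ]; then
    # ssh-keygen -f does not create parent directories, so do that first
    mkdir -p -m 700 "$(dirname "$keypath")" || return 1
    # -N "" means no passphrase (an assumption for unattended use)
    ssh-keygen -t rsa -b 4096 -C "$comment" -f "$keypath" -N "" || return 1
  fi
  export PHOTON_SSH_KEYPATH="$keypath"
}

# Usage matching the post:
# ensure_ssh_key "$HOME/.ssh/id_rsa" "chogan@vmware.com"
```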

The other place this information is needed is a “user-data.txt” file. This is what the user-data.txt file should look like. Simply replace the “ZZZZ” in the ssh-rsa line with the key data from the RSA public key (id_rsa.pub) that you created in the previous step.

#cloud-config
groups:
  - docker
# Configure the Dockermachine user
users:
  - name: docker
    gecos: Dockermachine
    primary-group: docker
    lock-passwd: false
    passwd:
    ssh-authorized-keys:
      - ssh-rsa ZZZZZZZZZZZZZZZZZZZZZ == chogan@vmware.com
    sudo: ALL=(ALL) NOPASSWD:ALL
    shell: /bin/bash

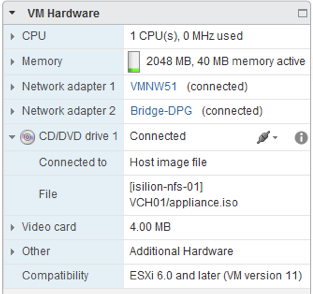
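Rather than pasting the key by hand, user-data.txt can be generated from the .pub file, which already holds the full `ssh-rsa <key> <comment>` line. The sketch below writes the same cloud-config keys as the file above; `write_user_data` is a hypothetical helper and the file paths are passed in as arguments.

```shell
# Hypothetical helper: write user-data.txt with the public key inserted.
write_user_data() {
  pubkey_file="$1"   # e.g. /home/cormac/.ssh/id_rsa.pub
  out_file="$2"      # e.g. user-data.txt
  [ -r "$pubkey_file" ] || { echo "cannot read $pubkey_file" >&2; return 1; }
  # The .pub file is a single "ssh-rsa <key> <comment>" line
  pubkey=$(cat "$pubkey_file")
  cat > "$out_file" <<EOF
#cloud-config
groups:
  - docker
# Configure the Dockermachine user
users:
  - name: docker
    gecos: Dockermachine
    primary-group: docker
    lock-passwd: false
    passwd:
    ssh-authorized-keys:
      - $pubkey
    sudo: ALL=(ALL) NOPASSWD:ALL
    shell: /bin/bash
EOF
}

# Usage: write_user_data "$HOME/.ssh/id_rsa.pub" user-data.txt
```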

Now that we have the “user-data.txt” file, we are going to create our own ISO image from it. This ISO is attached to any VM deployed on Photon Controller for the initial boot, and the VM then picks up the image (in our example, the Debian image) to boot. This is why we installed genisoimage, which provides mkisofs, earlier.

mkisofs -rock -o cloud-init.iso user-data.txt

Now we need to add another environment variable that points to this cloud-init.iso.

export PHOTON_ISO_PATH=/home/cormac/docker-machine/cloud-init.iso
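The ISO creation and the export can be combined so that PHOTON_ISO_PATH always holds an absolute path, which matters if docker-machine is later run from a different directory. This is a sketch: `make_cloud_init_iso` is a hypothetical helper, it keeps the post's `-rock -o` flags, and falling back to `genisoimage` when `mkisofs` is absent is an assumption rather than something the post does. `DRY_RUN=1` prints the command instead of running it.

```shell
# Hypothetical helper: build the cloud-init ISO and export PHOTON_ISO_PATH.
make_cloud_init_iso() {
  user_data="$1"
  iso_out="$2"
  # Prefer mkisofs as in the post; genisoimage accepts the same -rock and -o options
  if command -v mkisofs >/dev/null 2>&1; then tool=mkisofs; else tool=genisoimage; fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$tool -rock -o $iso_out $user_data"
  else
    "$tool" -rock -o "$iso_out" "$user_data" || return 1
  fi
  # Store an absolute path so docker-machine can find the ISO from any directory
  case "$iso_out" in
    /*) PHOTON_ISO_PATH="$iso_out" ;;
    *) PHOTON_ISO_PATH="$(pwd)/$iso_out" ;;
  esac
  export PHOTON_ISO_PATH
}

# Usage: make_cloud_init_iso user-data.txt cloud-init.iso
```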

Now if we examine the full set of environment variables for Photon, along with our own SSH and cloud-init.iso, this is what we should see:

PHOTON_DISK_FLAVOR=DockerDiskFlavor
PHOTON_VM_FLAVOR=DockerFlavor
PHOTON_ISO_PATH=/home/cormac/docker-machine/cloud-init.iso
PHOTON_SSH_KEYPATH=/home/cormac/.ssh/id_rsa
PHOTON_PROJECT=a1b993e6-3838-43f7-b4fa-3870cdc0ea76
PHOTON_ENDPOINT=http://10.27.44.34
PHOTON_IMAGE=0031278e-f53e-4081-9937-8ccea68c61dd
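Before running docker-machine, a pre-flight check along these lines can confirm that the variables listed above are set. It is a sketch: `check_photon_env` is a hypothetical helper; it expects either PHOTON_SSH_KEYPATH (your own key) or the PHOTON_SSH_USER/PHOTON_SSH_USER_PASSWORD pair (the defaults), and it only checks for the ISO on the own-key route because that is where the post creates it.

```shell
# Hypothetical helper: verify the PHOTON_* settings used in this post before "docker-machine create".
check_photon_env() {
  ok=0
  for var in PHOTON_ENDPOINT PHOTON_PROJECT PHOTON_IMAGE PHOTON_VM_FLAVOR PHOTON_DISK_FLAVOR; do
    # Read the variable whose name is in $var (empty if unset)
    eval "val=\${$var:-}"
    if [ -z "$val" ]; then echo "not set: $var" >&2; ok=1; fi
  done
  if [ -n "${PHOTON_SSH_KEYPATH:-}" ]; then
    # Own-key route: the private key and the cloud-init ISO must both exist
    [ -f "$PHOTON_SSH_KEYPATH" ] || { echo "key not found: $PHOTON_SSH_KEYPATH" >&2; ok=1; }
    [ -f "${PHOTON_ISO_PATH:-}" ] || { echo "ISO not found: ${PHOTON_ISO_PATH:-<unset>}" >&2; ok=1; }
  elif [ -z "${PHOTON_SSH_USER:-}" ] || [ -z "${PHOTON_SSH_USER_PASSWORD:-}" ]; then
    echo "set PHOTON_SSH_KEYPATH or PHOTON_SSH_USER/PHOTON_SSH_USER_PASSWORD" >&2; ok=1
  fi
  return $ok
}

# Usage: check_photon_env && docker-machine create -d photon chub004
```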

OK. Everything is in place. We can now use docker-machine to deploy directly to Photon Controller.

Step 5 – Deploy the docker-machine

Now it is just a matter of running docker-machine with the create command and using the -d photon option to specify which plugin to use. As you can see, the ISO is attached to the VM to do the initial boot, and then our Debian image is used for the provisioning:

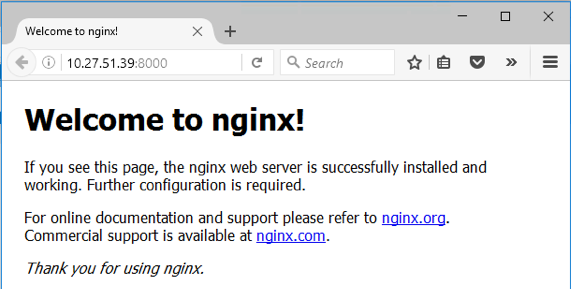

$ docker-machine create -d photon chub004
Running pre-create checks...
Creating machine...
(chub004) VM was created with Id: af1ea29a-0323-4cbb-8845-37de1123a4b2
(chub004) ISO is attached to VM.
(chub004) VM is started.
(chub004) VM IP: 10.27.34.29
Waiting for machine to be running, this may take a few minutes...
Detecting operating system of created instance...
Waiting for SSH to be available...
Detecting the provisioner...
Provisioning with debian...
Copying certs to the local machine directory...
Copying certs to the remote machine...
Setting Docker configuration on the remote daemon...
Checking connection to Docker...
Docker is up and running!
To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env chub004

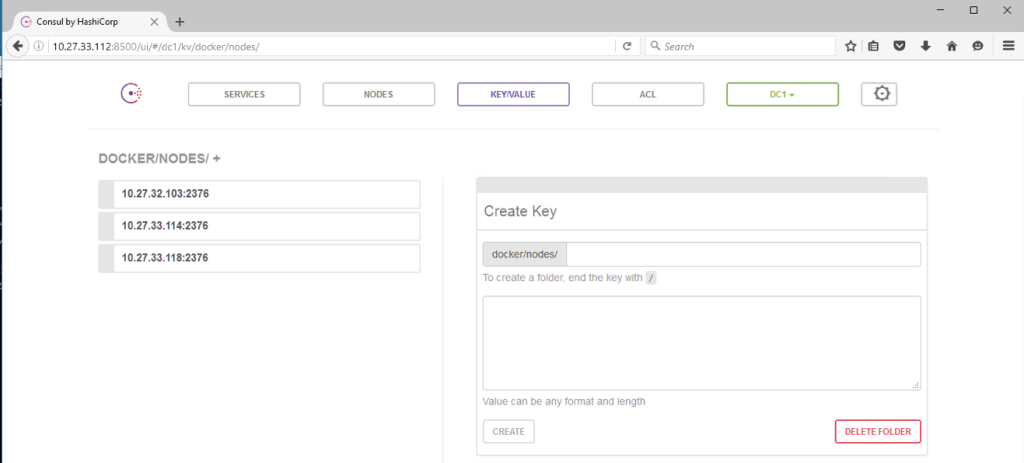
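When you need more than one host, for example as a starting point for a Swarm cluster, the same create command can be repeated per machine name. This is a sketch: `create_photon_machines` is a hypothetical helper, the machine names in the usage line are hypothetical, and it stops at the first failed create. `DRY_RUN=1` prints the commands instead of running them.

```shell
# Hypothetical helper: create one Photon-backed docker-machine host per name given.
create_photon_machines() {
  for name in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "docker-machine create -d photon $name"
    else
      # Stop at the first failure so a broken setup is not repeated for every host
      docker-machine create -d photon "$name" || return 1
    fi
  done
}

# Usage: create_photon_machines chub001 chub002 chub003
```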

Now you can query the machine:

$ docker-machine env chub004
export DOCKER_TLS_VERIFY="1"
export DOCKER_HOST="tcp://10.27.34.29:2376"
export DOCKER_CERT_PATH="/home/cormac/.docker/machine/machines/chub004"
export DOCKER_MACHINE_NAME="chub004"
# Run this command to configure your shell:
# eval $(docker-machine env chub004)
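Following the hint at the end of that output, the exports can be applied to the current shell and checked in one step. This is a sketch: `use_machine` is a hypothetical helper, and `DOCKER_MACHINE_BIN` is a hypothetical override (defaulting to `docker-machine`) that makes the function easy to test without a real host. It relies on `docker-machine env` exporting DOCKER_MACHINE_NAME, as the output above shows.

```shell
# Hypothetical helper: point the local Docker client at a docker-machine host.
use_machine() {
  machine="$1"
  env_out=$("${DOCKER_MACHINE_BIN:-docker-machine}" env "$machine") || return 1
  # Apply the export lines; the "#" comment lines in the output are ignored by eval
  eval "$env_out"
  [ "${DOCKER_MACHINE_NAME:-}" = "$machine" ] || { echo "environment not set for $machine" >&2; return 1; }
  echo "Docker client now targets $DOCKER_HOST"
}

# Usage: use_machine chub004 && docker info
```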

OK – so we have successfully created the machines. The next step will be to do something useful with them, such as creating a Docker Swarm cluster. Let’s leave that for another post. Hopefully this shows the versatility of Photon Controller, which lets you deploy machines using the docker-machine command.

By the way, if you are attending DockerCon in Seattle next week, drop by the VMware booth where docker-machine on Photon Controller will be demoed.

The post docker-machine driver plugin for Photon Controller appeared first on CormacHogan.com.