This is part of a series of articles describing how to use the new features of vSphere Integrated Containers (VIC) v0.4.0. In previous posts, we looked at deploying your first Virtual Container Host (VCH) and container using the docker API. I also showed you how to create some volumes to provide consistent storage for containers. In this post, we shall take a closer look at networking, and what commands are available to do container networking. I will also highlight some areas where there is still work to be done.

Also, please note that VIC is still not production ready. The aim of these posts is to get you started with VIC, and help you to familiarize yourself with some of the features. Many of the commands and options which work for v0.4.0 may not work in future releases, especially the GA version.

I think the first thing we need to do is to describe the various networks that may be configured when a Virtual Container Host is deployed.

Bridge network

The bridge network identifies a private port group for containers. This is a network used to support container to container communications. IP addresses on this network are managed by the VCH appliance VM and it’s assumed that this network is private and only the containers are attached to it. If this option is omitted from the create command, and the target is an ESXi host, then a regular standard vSwitch will be created with no physical uplinks associated with it. If the network is omitted, and the target is a vCenter server, an error will be displayed as a distributed port group is required and needs to exist in advance of the deployment. This should be dedicated and must not be the same as any management, external or client network.

Management network

The management network identifies the network that the VCH appliance VM should use to connect to the vSphere infrastructure. This must be the same vSphere infrastructure identified in the target parameter. This is also the network over which the VCH appliance VM will receive incoming connections (on port 2377) from the ESXi hosts running the “containers as VMs”. This means that (a) the VCH appliance VM must be able to reach the vSphere API and (b) the ESXi hosts running the container VMs must be able to reach the VCH appliance VM (to support the docker attach call).

External network

The external network is a VM portgroup/network in the vSphere environment on which container port forwarding should occur. For example, docker run -p 8080:80 -d tomcat will expose port 8080 on the VCH appliance VM (that is serving the DOCKER_API) and forward connections from the identified network to the tomcat container. If --client-network is specified as a different VM network, then attempting to connect to port 8080 on the appliance from the client network will fail. Likewise, attempting to connect to the docker API from the external network will also fail. This allows some degree of control over how exposed the docker API is while still exposing ports for application traffic. The external network defaults to the “VM Network”.

Client network

The client network identifies the VM portgroup/network in the vSphere environment that has access to the DOCKER_API. If not set, it defaults to the same network as the external network.

Default Container network

This is the name of a network that can be used for inter-container communication instead of the bridge network. It must use the name of an existing distributed port group when deploying VIC to a vCenter server target. An alias can be specified; if it is not, the alias is set to the name of the port group. The alias is used when specifying the container network DNS, the container network gateway, and the container network IP address range. This allows multiple container networks to be specified. The defaults are 172.16.0.1 for the DNS server and gateway, and 172.16.0.0/16 for the IP address range. If a container network is not specified, the bridge network is used by default.

This network diagram, taken from the official VIC documentation on github, provides a very good overview of the various VIC related networks:

Let’s run some VCH deployment examples with some different network options. First, I will not specify any network options which means that management and client will share the same network as external, which defaults to the VM Network. My VM Network is attached to VLAN 32, and has a DHCP server to provide IP address. Here are the results.

    root@photon-NaTv5i8IA [ /workspace/vic ]# ./vic-machine-linux create \
    --bridge-network Bridge-DPG \
    --image-datastore isilion-nfs-01 \
    -t 'administrator@vsphere.local:zzzzzzz@10.27.51.103' \
    --compute-resource Mgmt
    INFO[2016-07-15T09:31:59Z] ### Installing VCH ####
    .
    .
    INFO[2016-07-15T09:32:01Z] Network role client is sharing NIC with external
    INFO[2016-07-15T09:32:01Z] Network role management is sharing NIC with external
    .
    .
    INFO[2016-07-15T09:32:34Z] Connect to docker:
    INFO[2016-07-15T09:32:34Z] docker -H 10.27.32.113:2376 --tls info
    INFO[2016-07-15T09:32:34Z] Installer completed successfully

Now, let’s deploy my external network on another network. This time it is VM Network “VMNW51”. This is on VLAN 51, which also has a DHCP server to provide addresses. Note once again that the client and management networks share the same network as the external network.

    root@photon-NaTv5i8IA [ /workspace/vic ]# ./vic-machine-linux create \
    --bridge-network Bridge-DPG \
    --image-datastore isilion-nfs-01 \
    -t 'administrator@vsphere.local:zzzzz@10.27.51.103' \
    --compute-resource Mgmt \
    --external-network "VMNW51"
    INFO[2016-07-14T14:43:04Z] ### Installing VCH ####
    .
    .
    INFO[2016-07-14T14:43:06Z] Network role management is sharing NIC with client
    INFO[2016-07-14T14:43:06Z] Network role external is sharing NIC with client
    .
    .
    INFO[2016-07-14T14:43:44Z] Connect to docker:
    INFO[2016-07-14T14:43:44Z] docker -H 10.27.51.47:2376 --tls info
    INFO[2016-07-14T14:43:44Z] Installer completed successfully

Now let’s try an example where the external network is on VLAN 32 but the management network is on VLAN 51. Note that this time there is no message about the management network sharing a NIC with the client network.

    root@photon-NaTv5i8IA [ /workspace/vic ]# ./vic-machine-linux create \
    --bridge-network Bridge-DPG \
    --image-datastore isilion-nfs-01 \
    -t 'administrator@vsphere.local:zzzzzzz@10.27.51.103' \
    --compute-resource Mgmt \
    --management-network "VMNW51" \
    --external-network "VM Network"
    INFO[2016-07-15T09:40:43Z] ### Installing VCH ####
    .
    .
    INFO[2016-07-15T09:40:45Z] Network role client is sharing NIC with external
    .
    .
    INFO[2016-07-15T09:41:24Z] Connect to docker:
    INFO[2016-07-15T09:41:24Z] docker -H 10.27.33.44:2376 --tls info
    INFO[2016-07-15T09:41:24Z] Installer completed successfully
    root@photon-NaTv5i8IA [ /workspace/vic ]#
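The role sharing seen in the three deployments above can be summarised with a small Python sketch. This is only a model of the defaults as described in this post (external falls back to "VM Network", client and management fall back to external); the function names are hypothetical and are not part of vic-machine. The bridge network is left out because it always sits on its own port group.

```python
from collections import defaultdict


def resolve_network_roles(external=None, client=None, management=None):
    """Return the port group each VCH network role ends up on.

    Assumed defaults, taken from the descriptions in this post:
    external falls back to "VM Network", and client and management
    fall back to whatever external resolves to.
    """
    external = external or "VM Network"
    return {
        "external": external,
        "client": client or external,
        "management": management or external,
    }


def shared_nics(roles):
    """Group roles by port group; roles in the same group share one NIC."""
    groups = defaultdict(list)
    for role, portgroup in roles.items():
        groups[portgroup].append(role)
    return {portgroup: sorted(names) for portgroup, names in groups.items()}


# The three deployments from this post.
print(shared_nics(resolve_network_roles()))
print(shared_nics(resolve_network_roles(external="VMNW51")))
print(shared_nics(resolve_network_roles(external="VM Network",
                                        management="VMNW51")))
```

In the third case the management role lands in a group of its own, which is consistent with the installer no longer reporting that management shares a NIC.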

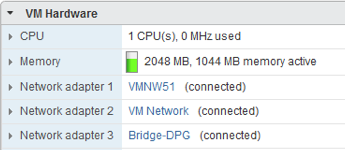

Let’s examine the VCH appliance VM from a vSphere perspective:

So we can see 3 adapters on the VCH – 1 is the external network, 2 is the management network and 3 is the bridge network to access the container network. And finally, just to ensure that we can deploy a container with this network configuration, we will do the following:

    root@photon-NaTv5i8IA [ /workspace/vic ]# docker -H 10.27.33.44:2376 --tls \
    run -it busybox
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    a3ed95caeb02: Pull complete
    8ddc19f16526: Pull complete
    Digest: sha256:65ce39ce3eb0997074a460adfb568d0b9f0f6a4392d97b6035630c9d7bf92402
    Status: Downloaded newer image for library/busybox:latest
    / #

Now that we have connected to the container running the busybox image, let’s examine its networking:

    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq qlen 1000
        link/ether 00:50:56:86:18:b6 brd ff:ff:ff:ff:ff:ff
        inet 172.16.0.2/16 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::250:56ff:fe86:18b6/64 scope link
           valid_lft forever preferred_lft forever
    / #
    / # cat /etc/resolv.conf
    nameserver 172.16.0.1
    / #
    / # netstat -rn
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags   MSS Window  irtt Iface
    0.0.0.0         172.16.0.1      0.0.0.0         UG        0 0          0 eth0
    172.16.0.0      0.0.0.0         255.255.0.0     U         0 0          0 eth0
    / #

So we can see that the container has been assigned an IP address of 172.16.0.2, and that the DNS server and gateway are set to 172.16.0.1. This is the default container network in VIC.
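As a quick sanity check, the values reported inside the container can be compared with each other using Python's standard ipaddress module. This is just a sketch; the addresses are copied from the output above.

```python
import ipaddress

# Values read from the busybox container output above.
container = ipaddress.ip_interface("172.16.0.2/16")
gateway = ipaddress.ip_address("172.16.0.1")

network = container.network
print(network)             # the subnet the container sits in
print(network.netmask)     # compare with the Genmask column of netstat -rn
print(gateway in network)  # the default gateway should be on this subnet
```

The subnet and netmask derived from the container's address match the second line of the routing table, and the gateway is on the same subnet.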

Alternate Container Network

Let’s now look at creating a completely different container network. To do this, we use some additional vic-machine command line arguments, as shown below:

    ./vic-machine-linux create --bridge-network Bridge-DPG \
    --image-datastore isilion-nfs-01 \
    -t 'administrator@vsphere.local:VMware123!@10.27.51.103' \
    --compute-resource Mgmt \
    --container-network con-nw:con-nw \
    --container-network-gateway con-nw:192.168.100.1/16 \
    --container-network-dns con-nw:192.168.100.1 \
    --container-network-ip-range con-nw:192.168.100.2-100

The first thing to note is that since I am deploying to a vCenter Server target, the container network must be a distributed portgroup. In my case, it is called con-nw, and the parameter --container-network specifies which DPG to use. You also have the option of adding an alias for the network, separated from the DPG with “:”. This alias can then be used in other parts of the command line. If you do not specify an alias, the full name of the DPG must be used in other parts of the command line. In my case, I made the alias the same as the DPG.
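To make the DPG:alias convention concrete, here is a minimal Python sketch of how such a value could be split. The function is hypothetical and only illustrates the rule described above (no alias means the DPG name is used); it is not taken from the vic-machine source, and the "Prod-DPG:web" value is an invented example.

```python
def parse_container_network(value):
    """Split a 'portgroup[:alias]' string into (portgroup, alias).

    If no alias is given, the port group name doubles as the alias,
    as described in the text above.
    """
    portgroup, _, alias = value.partition(":")
    if not portgroup:
        raise ValueError("a port group name is required")
    return portgroup, (alias or portgroup)


print(parse_container_network("con-nw:con-nw"))  # alias given explicitly
print(parse_container_network("con-nw"))         # alias falls back to the DPG name
print(parse_container_network("Prod-DPG:web"))   # hypothetical DPG with a shorter alias
```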

[Note: this is basically an external network, so the DNS and gateway, as well as the range of consumable IP addresses for containers must be available through some external means – containers are simply consuming them, and VCH will not provide DHCP or DNS services on this external network]

Other options are necessary to specify the gateway, DNS server and IP address range for this container network. Both CIDR notation and ranges work. Note however that the IP address range must not include the IP address of the gateway or DNS server, which is why I have specified a range. Here is the output from running the command:

    INFO[2016-07-20T09:06:05Z] ### Installing VCH ####
    INFO[2016-07-20T09:06:05Z] Generating certificate/key pair - private key in \
    ./virtual-container-host-key.pem
    INFO[2016-07-20T09:06:07Z] Validating supplied configuration
    INFO[2016-07-20T09:06:07Z] Firewall status: DISABLED on /CNA-DC/host/Mgmt/10.27.51.8
    INFO[2016-07-20T09:06:07Z] Firewall configuration OK on hosts:
    INFO[2016-07-20T09:06:07Z] /CNA-DC/host/Mgmt/10.27.51.8
    INFO[2016-07-20T09:06:07Z] License check OK on hosts:
    INFO[2016-07-20T09:06:07Z] /CNA-DC/host/Mgmt/10.27.51.8
    INFO[2016-07-20T09:06:07Z] DRS check OK on:
    INFO[2016-07-20T09:06:07Z] /CNA-DC/host/Mgmt/Resources
    INFO[2016-07-20T09:06:08Z] Creating Resource Pool virtual-container-host
    INFO[2016-07-20T09:06:08Z] Creating appliance on target
    ERRO[2016-07-20T09:06:08Z] unable to encode []net.IP (slice) for \
    guestinfo./container_networks|con-nw/dns: net.IP is an unhandled type
    INFO[2016-07-20T09:06:08Z] Network role client is sharing NIC with external
    INFO[2016-07-20T09:06:08Z] Network role management is sharing NIC with external
    ERRO[2016-07-20T09:06:09Z] unable to encode []net.IP (slice) for \
    guestinfo./container_networks|con-nw/dns: net.IP is an unhandled type
    INFO[2016-07-20T09:06:09Z] Uploading images for container
    INFO[2016-07-20T09:06:09Z] bootstrap.iso
    INFO[2016-07-20T09:06:09Z] appliance.iso
    INFO[2016-07-20T09:06:14Z] Registering VCH as a vSphere extension
    INFO[2016-07-20T09:06:20Z] Waiting for IP information
    INFO[2016-07-20T09:06:41Z] Waiting for major appliance components to launch
    INFO[2016-07-20T09:06:41Z] Initialization of appliance successful
    INFO[2016-07-20T09:06:41Z]
    INFO[2016-07-20T09:06:41Z] Log server:
    INFO[2016-07-20T09:06:41Z] https://10.27.32.116:2378
    INFO[2016-07-20T09:06:41Z]
    INFO[2016-07-20T09:06:41Z] DOCKER_HOST=10.27.32.116:2376
    INFO[2016-07-20T09:06:41Z]
    INFO[2016-07-20T09:06:41Z] Connect to docker:
    INFO[2016-07-20T09:06:41Z] docker -H 10.27.32.116:2376 --tls info
    INFO[2016-07-20T09:06:41Z] Installer completed successfully

Ignore the “unable to encode” errors – these will be removed in a future release. Before we create our first container, let’s examine the networks:

    root@photon-NaTv5i8IA [ /workspace/vic ]# docker -H 10.27.32.116:2376 \
    --tls network ls
    NETWORK ID          NAME                DRIVER
    8627c6f733e8        bridge              bridge
    c23841d4ac24        con-nw              external
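The rule mentioned earlier, that the container IP range must not contain the gateway or DNS address, is easy to get wrong, so it can be worth checking the values before running vic-machine. The following Python sketch does that with the standard ipaddress module. The function names are hypothetical, only the dash form of the range is handled (not CIDR), and it assumes that a short range such as 192.168.100.2-100 means "last octet 2 through 100".

```python
import ipaddress


def parse_ip_range(text):
    """Parse 'A.B.C.D-E' or 'A.B.C.D-A.B.C.E' into (first, last) addresses."""
    start_text, _, end_text = text.partition("-")
    first = ipaddress.ip_address(start_text)
    if "." in end_text:
        last = ipaddress.ip_address(end_text)
    else:
        # Short form (assumed meaning): only the last octet is given.
        prefix = start_text.rsplit(".", 1)[0]
        last = ipaddress.ip_address(f"{prefix}.{end_text}")
    if last < first:
        raise ValueError("range end is before range start")
    return first, last


def check_container_network(gateway_cidr, dns, ip_range):
    """Return a list of problems; an empty list means the settings are consistent."""
    gateway = ipaddress.ip_interface(gateway_cidr)
    first, last = parse_ip_range(ip_range)
    problems = []
    for name, addr in (("gateway", gateway.ip),
                       ("dns", ipaddress.ip_address(dns))):
        if first <= addr <= last:
            problems.append(f"{name} {addr} is inside the container IP range")
    for addr in (first, last):
        if addr not in gateway.network:
            problems.append(f"{addr} is outside {gateway.network}")
    return problems


# Values from the vic-machine command used in this post.
print(check_container_network("192.168.100.1/16", "192.168.100.1",
                              "192.168.100.2-100"))
# A range that wrongly starts at the gateway/DNS address.
print(check_container_network("192.168.100.1/16", "192.168.100.1",
                              "192.168.100.1-100"))
```

With the values from this post the list of problems is empty; starting the range at .1 instead reports both the gateway and the DNS server as being inside the range.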

Run a Container on the Container Network

Now we can run a container (a simple busybox one) and specify our newly created “con-nw”, as shown here:

    root@photon-NaTv5i8IA [ /workspace/vic040 ]# docker -H 10.27.32.116:2376 \
    --tls run -it --net=con-nw busybox
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    a3ed95caeb02: Pull complete
    8ddc19f16526: Pull complete
    Digest: sha256:65ce39ce3eb0997074a460adfb568d0b9f0f6a4392d97b6035630c9d7bf92402
    Status: Downloaded newer image for library/busybox:latest
    / #

Now let’s take a look at the networking inside the container:

    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq qlen 1000
        link/ether 00:50:56:86:51:bc brd ff:ff:ff:ff:ff:ff
        inet 192.168.100.2/16 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::250:56ff:fe86:51bc/64 scope link
           valid_lft forever preferred_lft forever
    / # netstat -rn
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags   MSS Window  irtt Iface
    0.0.0.0         192.168.100.1   0.0.0.0         UG        0 0          0 eth0
    192.168.0.0     0.0.0.0         255.255.0.0     U         0 0          0 eth0
    / # cat /etc/resolv.conf
    nameserver 192.168.100.1
    / #

So it looks like all of the container network settings have taken effect. And if we look at this container from a vSphere perspective, we can see it is attached to the con-nw DPG:

This is one of the advantages of VIC: visibility into container network configurations (not just a black box).

Some caveats

As I keep mentioning, this product is not yet production ready, but it is getting close. The purpose of these posts is to give you a good experience if you want to try out v0.4.0 right now. With that in mind, there are a few caveats to be aware of with this version.

- Port exposing/port mapping is not yet working. If you want to run a web server type app (e.g. Nginx) in a container and have its ports mapped through the docker API endpoint (a popular scenario to test/demo with), you cannot do this at the moment.

- You saw the DNS encoding errors in the VCH create flow – these are cosmetic and can be ignored. These will get fixed.

- The gateway CIDR works with /16 but not /24. Stick with a /16 CIDR for the moment for your testing.

The post Container Networks in VIC 0.4.0 appeared first on CormacHogan.com.