I’ve been working very closely with our vSphere Integrated Containers (VIC) team here at VMware recently, and am delighted to say that v0.4.0 is now available on GitHub. Of course, this is still a tech preview and is not supported in production. However, for those of you who are interested, it gives you an opportunity to try it out and see the significant progress the team has made over the last couple of months. You can download it from bintray. This version of VIC brings us closer and closer to the original functionality of “Project Bonneville” for running containers as VMs (not in VMs) on vSphere. The docker API endpoint now provides almost identical functionality to running docker anywhere else, although there is still a little work to do. Let’s take a closer look.

What is VIC?

VIC allows customers to run “containers as VMs” in the vSphere infrastructure, rather than “containers in a VM”. It can be deployed directly to a standalone ESXi host or to vCenter Server. This has some advantages over the “containers in a VM” approach, which I highlighted in my earlier post comparing and contrasting VIC with Photon Controller.

VCH Deployment

Simply download the gzipped tar archive from bintray and extract it. I downloaded it to a folder called /workspace on my Photon OS VM.

root@photon [ /workspace ]# tar zxvf vic_0.4.0.tar.gz

vic/

vic/bootstrap.iso

vic/vic-machine-darwin

vic/appliance.iso

vic/README

vic/LICENSE

vic/vic-machine-windows.exe

vic/vic-machine-linux

As you can see, there is a vic-machine command for Linux, Windows and Darwin (macOS). Let’s see what options are available for building the VCH (Virtual Container Host).

The “appliance.iso” is used to deploy the VCH, while the “bootstrap.iso” provides a minimal Linux image that boots each container VM before it is overlaid with the chosen container image. More on this shortly.
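If you script the deployment rather than typing the commands by hand, the script has to pick the vic-machine binary that matches the machine it runs on. The following is a minimal Python sketch of that selection. The three file names come from the archive listing above; the `vic_machine_binary` helper itself is hypothetical and not part of VIC.

```python
import platform
from typing import Optional

# vic-machine binaries as listed in the extracted vic/ folder of the v0.4.0 archive,
# keyed by the value platform.system() returns on each OS.
VIC_MACHINE_BINARIES = {
    "Linux": "vic-machine-linux",
    "Darwin": "vic-machine-darwin",       # macOS
    "Windows": "vic-machine-windows.exe",
}


def vic_machine_binary(system: Optional[str] = None) -> str:
    """Return the vic-machine file name for `system` (default: the current OS)."""
    system = system or platform.system()
    if system not in VIC_MACHINE_BINARIES:
        raise ValueError(f"no vic-machine binary is shipped for {system!r}")
    return VIC_MACHINE_BINARIES[system]
```

On the Photon OS VM used in this post, `vic_machine_binary()` returns `vic-machine-linux`.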

root@photon [ /workspace/vic ]# ./vic-machine-linux

NAME:

vic-machine-linux - Create and manage Virtual Container Hosts

USAGE:

vic-machine-linux [global options] command [command options] [arguments...]

VERSION:

2868-0fcaa7e27730c2b4d8d807f3de19c53670b94477

COMMANDS:

create Deploy VCH

delete Delete VCH and associated resources

inspect Inspect VCH

version Show VIC version information

GLOBAL OPTIONS:

--help, -h show help

--version, -v print the version

To get more information about the “create” command, run the following:

root@photon [ /workspace/vic ]# ./vic-machine-linux create -h

I won’t display the output here; you can see it for yourself when you run the command. Further details on deployment can be found in the official docs. In the following create example, I am going to:

- Deploy VCH to a vCenter Server at 10.27.51.103

- Use administrator@vsphere.local as the user, with a password of zzzzzzz

- Use the cluster called Mgmt as the destination resource pool for the VCH

- Create a resource pool and a VCH (Virtual Container Host), both named VCH01

- The external network (where images will be pulled from by VCH01) is VMNW51

- The bridge network to allow inter-container communication is a distributed port group called Bridge-DPG

- The datastore where container images are to be stored is isilion-nfs-01

- Persistent container volumes will be stored in the folder VIC on isilion-nfs-01 and will be labeled corvols.
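Each item in the list above maps onto one `vic-machine-linux create` flag. As a minimal sketch, assuming you drive vic-machine from Python, the hypothetical `VchSpec`, `create_argv` and `create_command_line` helpers below assemble the same command using only the flags that appear in this post. Building an argument list rather than a single shell string means that a password containing characters such as `!` is passed through unchanged when the list is handed to `subprocess.run`.

```python
import shlex
from dataclasses import dataclass
from typing import List


@dataclass
class VchSpec:
    """Deployment parameters from the list above (hypothetical container type)."""
    vcenter: str           # vCenter Server address
    user: str
    password: str
    compute_resource: str  # cluster or resource pool, e.g. "Mgmt"
    name: str              # VCH name; also used for the new resource pool
    external_network: str  # network used to pull images
    bridge_network: str    # port group for inter-container traffic
    image_datastore: str   # datastore for container images
    volume_store: str      # "<label>:<datastore>/<folder>"


def create_argv(spec: VchSpec, binary: str = "./vic-machine-linux") -> List[str]:
    """Build the vic-machine create arguments, using only flags shown in this post."""
    return [
        binary, "create",
        "--bridge-network", spec.bridge_network,
        "--image-datastore", spec.image_datastore,
        "-t", f"{spec.user}:{spec.password}@{spec.vcenter}",
        "--compute-resource", spec.compute_resource,
        "--external-network", spec.external_network,
        "--name", spec.name,
        "--volume-store", spec.volume_store,
    ]


def create_command_line(spec: VchSpec) -> str:
    """Render the same arguments as one shell-quoted line (Python 3.8+)."""
    return shlex.join(create_argv(spec))
```

With the values from the list, `create_command_line` produces a single-line equivalent of the command below, with shell quoting applied only where it is needed.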

Here is the command, and output:

root@photon [ /workspace/vic ]# ./vic-machine-linux create --bridge-network \
Bridge-DPG --image-datastore isilion-nfs-01 \
-t 'administrator@vsphere.local:zzzzzzz@10.27.51.103' \
--compute-resource Mgmt --external-network VMNW51 --name VCH01 \
--volume-store "corvols:isilion-nfs-01/VIC"

INFO[2016-07-14T08:03:02Z] ### Installing VCH ####

INFO[2016-07-14T08:03:02Z] Generating certificate/key pair - private key in ./VCH01-key.pem

INFO[2016-07-14T08:03:03Z] Validating supplied configuration

INFO[2016-07-14T08:03:03Z] Firewall status: DISABLED on /CNA-DC/host/Mgmt/10.27.51.8

INFO[2016-07-14T08:03:03Z] Firewall configuration OK on hosts:

INFO[2016-07-14T08:03:03Z] /CNA-DC/host/Mgmt/10.27.51.8

INFO[2016-07-14T08:03:04Z] License check OK on hosts:

INFO[2016-07-14T08:03:04Z] /CNA-DC/host/Mgmt/10.27.51.8

INFO[2016-07-14T08:03:04Z] DRS check OK on:

INFO[2016-07-14T08:03:04Z] /CNA-DC/host/Mgmt/Resources

INFO[2016-07-14T08:03:04Z] Creating Resource Pool VCH01

INFO[2016-07-14T08:03:04Z] Datastore path is [isilion-nfs-01] VIC

INFO[2016-07-14T08:03:04Z] Creating appliance on target

INFO[2016-07-14T08:03:04Z] Network role client is sharing NIC with external

INFO[2016-07-14T08:03:04Z] Network role management is sharing NIC with external

INFO[2016-07-14T08:03:05Z] Uploading images for container

INFO[2016-07-14T08:03:05Z] bootstrap.iso

INFO[2016-07-14T08:03:05Z] appliance.iso

INFO[2016-07-14T08:03:10Z] Registering VCH as a vSphere extension

INFO[2016-07-14T08:03:16Z] Waiting for IP information

INFO[2016-07-14T08:03:40Z] Waiting for major appliance components to launch

INFO[2016-07-14T08:03:40Z] Initialization of appliance successful

INFO[2016-07-14T08:03:40Z]

INFO[2016-07-14T08:03:40Z] Log server:

INFO[2016-07-14T08:03:40Z] https://10.27.51.40:2378

INFO[2016-07-14T08:03:40Z]

INFO[2016-07-14T08:03:40Z] DOCKER_HOST=10.27.51.40:2376

INFO[2016-07-14T08:03:40Z]

INFO[2016-07-14T08:03:40Z] Connect to docker:

INFO[2016-07-14T08:03:40Z] docker -H 10.27.51.40:2376 --tls info

INFO[2016-07-14T08:03:40Z] Installer completed successfully

root@photon [ /workspace/vic ]#
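The installer output ends with the DOCKER_HOST value for the new VCH. If you automate the deployment, you can pick that value out of the log instead of copying it by hand. This is a sketch only: it assumes the `DOCKER_HOST=<address>:<port>` log line looks as it does in the v0.4.0 output above (the format may change between releases), and `docker_host_from_log` and `docker_argv` are hypothetical helper names.

```python
import re
from typing import List, Optional

# Matches the "DOCKER_HOST=<ip>:<port>" line logged by vic-machine create on success.
_DOCKER_HOST_RE = re.compile(r"DOCKER_HOST=(\S+)")


def docker_host_from_log(log_text: str) -> Optional[str]:
    """Return the last DOCKER_HOST value found in vic-machine create output, if any."""
    matches = _DOCKER_HOST_RE.findall(log_text)
    return matches[-1] if matches else None


def docker_argv(docker_host: str, *args: str) -> List[str]:
    """Prefix a docker subcommand with the VCH endpoint, as in 'docker -H <host> --tls info'."""
    return ["docker", "-H", docker_host, "--tls", *args]
```

Returning `None` when no DOCKER_HOST line is present lets a calling script treat a failed or truncated install explicitly rather than connecting to the wrong endpoint.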

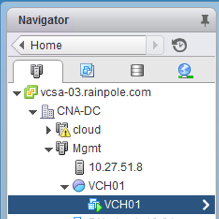

From the last few lines of output, I have the docker API endpoint needed to begin creating containers. Let’s look at what has taken place in vCenter at this point. First, we can see the new VCH resource pool and appliance:

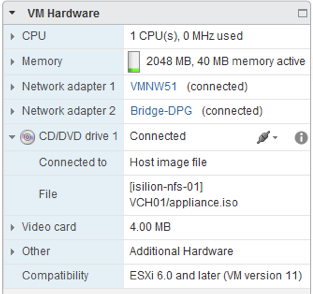

Next, if we examine the virtual hardware of the VCH, we can see how the appliance.iso is utilized, and that the VCH has access both to the external network (VMNW51), for downloading images from docker repositories, and to the container/bridge network:

Docker Containers

OK – so everything is now in place for us to start creating “containers as VMs” using standard docker commands against the docker endpoint provided by the VCH. Let’s begin with some basic docker query commands such as “info” and “ps”. These can be revisited at any point to get additional details about the state of the containers and images that have been deployed in your vSphere environment. Let’s first display the “info” output immediately followed by the “ps” output.

root@photon [ /workspace/vic ]# docker -H 10.27.51.40:2376 --tls info

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Storage Driver: vSphere Integrated Containers Backend Engine

vSphere Integrated Containers Backend Engine: RUNNING

Execution Driver: vSphere Integrated Containers Backend Engine

Plugins:

Volume: ds://://@isilion-nfs-01/%5Bisilion-nfs-01%5D%20VIC

Network: bridge

Kernel Version: 4.4.8-esx

Operating System: VMware Photon/Linux

OSType: linux

Architecture: x86_64

CPUs: 1

Total Memory: 1.958 GiB

Name: VCH01

ID: vSphere Integrated Containers

Docker Root Dir:

Debug mode (client): false

Debug mode (server): false

Registry: registry-1.docker.io

WARNING: No memory limit support

WARNING: No swap limit support

WARNING: No kernel memory limit support

WARNING: No cpu cfs quota support

WARNING: No cpu cfs period support

WARNING: No cpu shares support

WARNING: No cpuset support

WARNING: IPv4 forwarding is disabled

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

root@photon [ /workspace/vic ]#

root@photon [ /workspace/vic ]# docker -H 10.27.51.40:2376 --tls ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

root@photon [ /workspace/vic ]#
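Because `docker info` prints simple `Key: Value` lines, a script can use it to confirm that it is talking to a VCH rather than an ordinary Docker daemon. The sketch below is a minimal example under stated assumptions: `parse_docker_info` and `looks_like_vch` are hypothetical helpers, and the check relies on the storage driver string shown in the `info` output above.

```python
from typing import Dict


def parse_docker_info(text: str) -> Dict[str, str]:
    """Collect the 'Key: Value' lines of `docker info` output into a dict.

    WARNING lines and headings with no value (e.g. 'Plugins:') are skipped;
    indented sub-entries such as 'Running: 0' are stored under their own key.
    """
    info: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("WARNING:"):
            continue
        key, sep, value = line.partition(": ")  # split on the first ': ' only
        if sep:
            info[key] = value.strip()
    return info


def looks_like_vch(info: Dict[str, str]) -> bool:
    """Heuristic check: a VCH reports the VIC backend as its storage driver."""
    return info.get("Storage Driver") == "vSphere Integrated Containers Backend Engine"
```

Splitting on the first `": "` only keeps values such as the `ds://` volume URL intact.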

So not a lot going on at the moment. Let’s deploy our very first (simple) container – busybox:

root@photon [ /workspace/vic ]# docker -H 10.27.51.40:2376 --tls run -it busybox

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

a3ed95caeb02: Pull complete

8ddc19f16526: Pull complete

Digest: sha256:65ce39ce3eb0997074a460adfb568d0b9f0f6a4392d97b6035630c9d7bf92402

Status: Downloaded newer image for library/busybox:latest

/ # ls

bin etc lib mnt root sbin tmp var

dev home lost+found proc run sys usr

/ # ls /etc

group hostname hosts localtime passwd resolv.conf shadow

/ #

This has dropped me into a shell in the “busybox” container. It is a very simple image, but it confirms that the VCH was able to pull images from Docker Hub and that it has successfully launched a “container as a VM”.

Congratulations! You have deployed your first container “as a VM”.

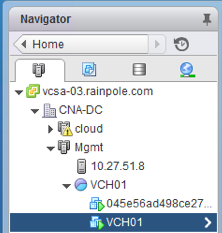

Let’s now go back to vCenter, and examine things from there. The first thing we notice is that in the VCH resource pool, we have our new container in the inventory:

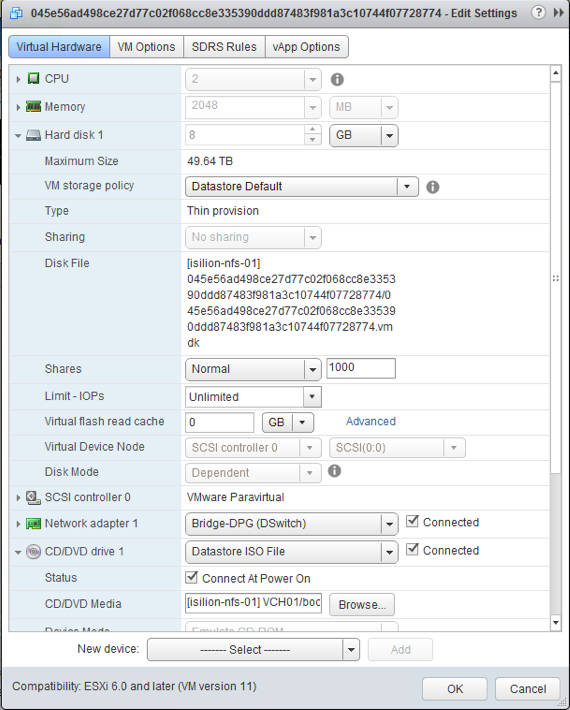

And now if we examine the virtual hardware of that container, we can find the location of the image on the image datastore, see that it is connected to the container/bridge network, and see that the CD-ROM drive is connected to the “bootstrap.iso” image that we saw in the VCH folder on initial deployment.

And now if I return to the Photon OS CLI (in a new shell), I can run additional docker commands such as “ps” to examine the state:

root@photon [ /workspace ]# docker -H 10.27.51.40:2376 --tls ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

045e56ad498c busybox "sh" 20 minutes ago Running ecstatic_meninsky

root@photon [ /workspace ]#

And we can see our running container. Now there are a lot of other things that we can do, but this is hopefully enough to get you started with v0.4.0.
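The `ps -a` output is a column-aligned table in which some values, such as `20 minutes ago`, contain spaces, so splitting each row on whitespace does not work. A minimal sketch, assuming the default table layout and the header names shown above, takes the column boundaries from where each header starts in the header line; `parse_docker_ps` is a hypothetical helper.

```python
from typing import Dict, List

# Column headers printed by `docker ps -a`, in order.
PS_COLUMNS = ["CONTAINER ID", "IMAGE", "COMMAND", "CREATED", "STATUS", "PORTS", "NAMES"]


def parse_docker_ps(text: str) -> List[Dict[str, str]]:
    """Parse the default (column-aligned) `docker ps -a` table into one dict per row.

    Column boundaries come from the start offset of each header, because
    values such as "20 minutes ago" contain single spaces.
    """
    lines = [ln for ln in text.splitlines() if ln.strip()]
    if not lines:
        return []
    header = lines[0]
    starts = [header.index(col) for col in PS_COLUMNS]
    rows = []
    for line in lines[1:]:
        row = {}
        for i, col in enumerate(PS_COLUMNS):
            end = starts[i + 1] if i + 1 < len(starts) else None  # last column runs to EOL
            row[col] = line[starts[i]:end].strip()
        rows.append(row)
    return rows
```

If you only need container IDs, the standard Docker client option `docker ps -a -q` prints just those and avoids table parsing altogether.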

Removing VCH

To tidy up, you can follow this procedure. First stop and remove the containers, then remove the VCH:

root@photon [ /workspace/vic ]# docker -H 10.27.51.40:2376 --tls stop 045e56ad498c

045e56ad498c

root@photon [ /workspace/vic ]# docker -H 10.27.51.40:2376 --tls rm 045e56ad498c

045e56ad498c

root@photon [ /workspace/vic ]# docker -H 10.27.51.40:2376 --tls ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

root@photon [ /workspace/vic ]# ./vic-machine-linux delete \
-t 'administrator@vsphere.local:zzzzzzz@10.27.51.103' \
--compute-resource Mgmt --name VCH01

INFO[2016-07-14T09:20:55Z] ### Removing VCH ####

INFO[2016-07-14T09:20:55Z] Removing VMs

INFO[2016-07-14T09:20:55Z] Removing images

INFO[2016-07-14T09:20:55Z] Removing volumes

INFO[2016-07-14T09:20:56Z] Removing appliance VM network devices

INFO[2016-07-14T09:20:58Z] Removing VCH vSphere extension

INFO[2016-07-14T09:21:02Z] Removing Resource Pool VCH01

INFO[2016-07-14T09:21:02Z] Completed successfully

root@photon [ /workspace/vic ]#
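The tidy-up follows a fixed order: stop and remove each container, then delete the VCH. A minimal sketch that generates that sequence is shown below; `teardown_commands` and `run_all` are hypothetical helpers, and the flags are exactly those used in the commands above. `run_all` uses `subprocess.run(..., check=True)` so that it stops at the first command that fails, rather than going on to delete the VCH while a container is still present.

```python
import subprocess
from typing import List, Sequence


def teardown_commands(docker_host: str, container_ids: Sequence[str],
                      target: str, compute_resource: str, vch_name: str,
                      binary: str = "./vic-machine-linux") -> List[List[str]]:
    """Return, in order, the commands to stop and remove each container, then delete the VCH.

    `target` is the 'user:password@vcenter' string passed to vic-machine via -t.
    """
    docker = ["docker", "-H", docker_host, "--tls"]
    cmds: List[List[str]] = []
    for cid in container_ids:
        cmds.append(docker + ["stop", cid])
        cmds.append(docker + ["rm", cid])
    cmds.append([binary, "delete", "-t", target,
                 "--compute-resource", compute_resource, "--name", vch_name])
    return cmds


def run_all(cmds: List[List[str]]) -> None:
    """Run each command in turn; CalledProcessError aborts on the first failure."""
    for cmd in cmds:
        subprocess.run(cmd, check=True)
```

Generating the list separately from running it makes it easy to print and review the commands before anything is deleted.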

For more details on using vSphere Integrated Containers v0.4.0, see the user guide and the command usage guide on GitHub.

And if you are coming to VMworld 2016, you should definitely check out the various sessions, labs and demos on Cloud Native Apps (CNA).

The post Getting Started with vSphere Integrated Containers v0.4.0 appeared first on CormacHogan.com.